Welcome to Dr. Neil's Notes

In here you will find notes made by Dr. Neil Roodyn. They cover a variety of topics, and this index file should help you navigate the notes.

As you are reading this introductory note, you should realize that these are not private notes, although they are not all fully formed yet. In this collection you will find some well-thought-through notes that are closer to articles or documents, along with some half-formed ideas and thoughts.

If you feel you have ideas to contribute, please get in touch with me. I will try to read all incoming thoughts and contributions.

Welcome to my notes.

Index

- General

- Software

- Social Networks

- Seamless Software

- Software Development

- Coding Notes

- .NET development on a Raspberry Pi

- .NET Console Animations

- .NET Console Clock

- .NET Console Weather

- .NET Camera on a Raspberry Pi

- .NET Web Server on Raspberry Pi

- .NET Camera Server on Raspberry Pi

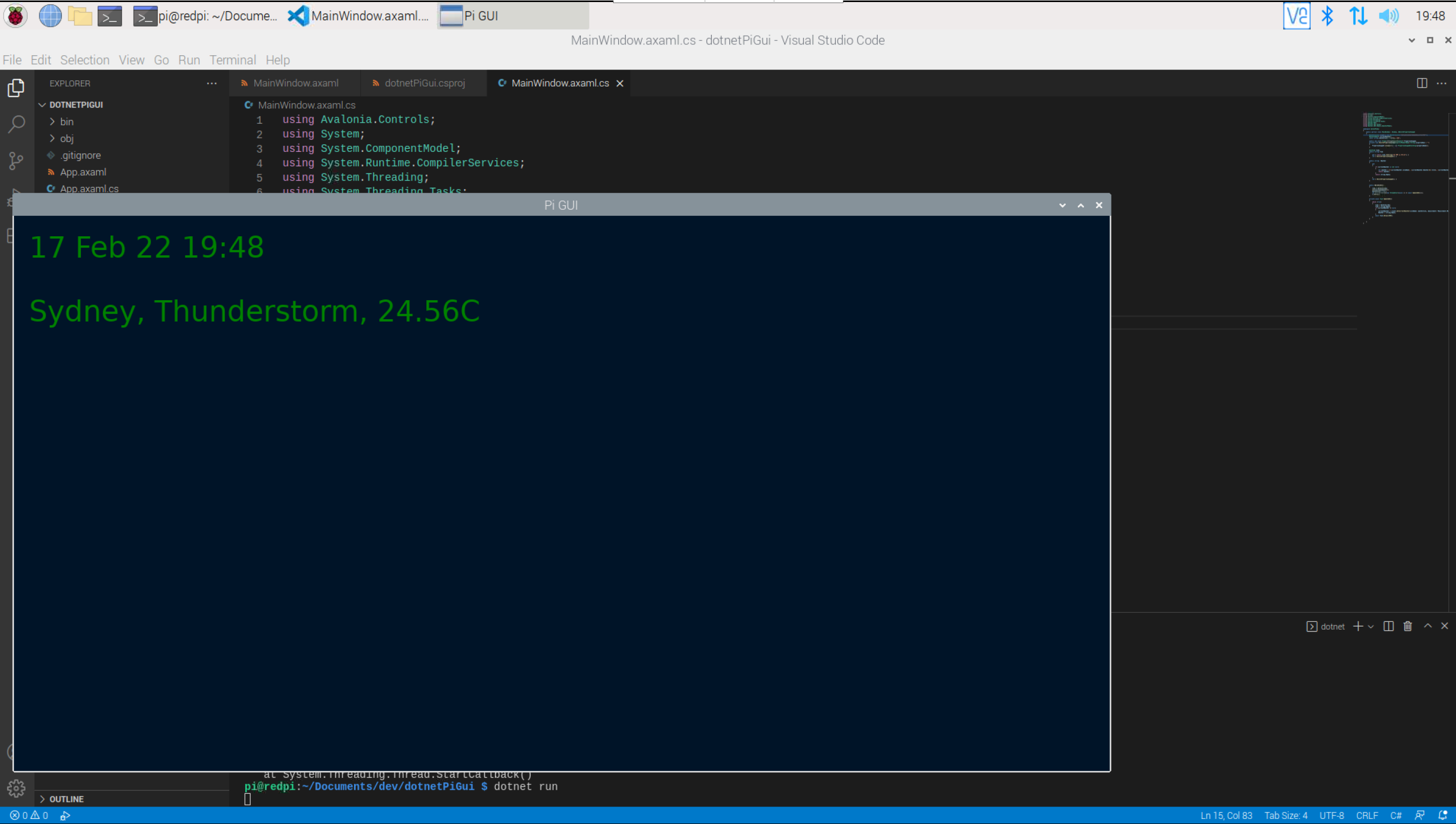

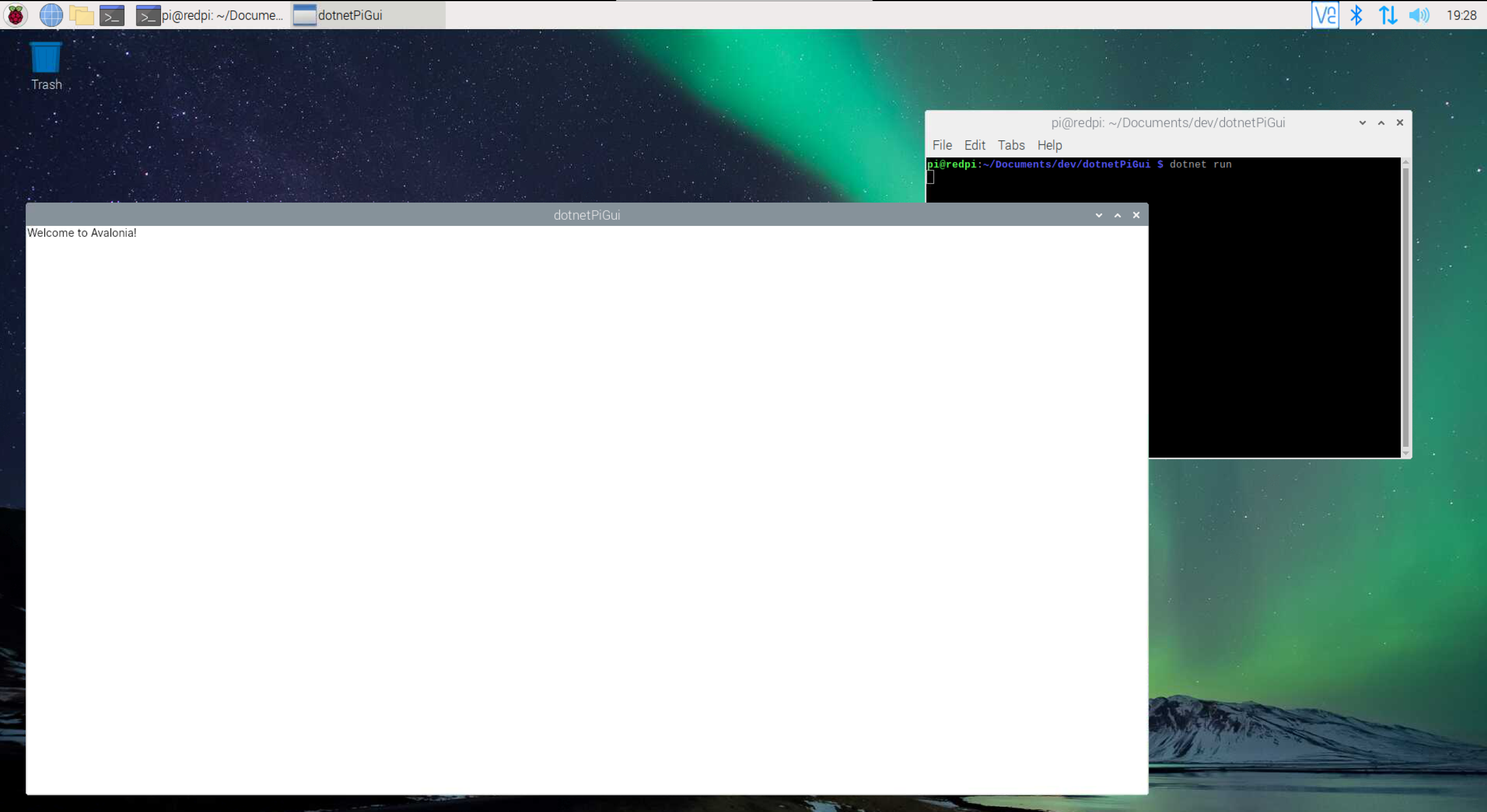

- .NET GUI application on Raspberry Pi with Avalonia

- .NET Picture Frame on Raspberry Pi with Avalonia

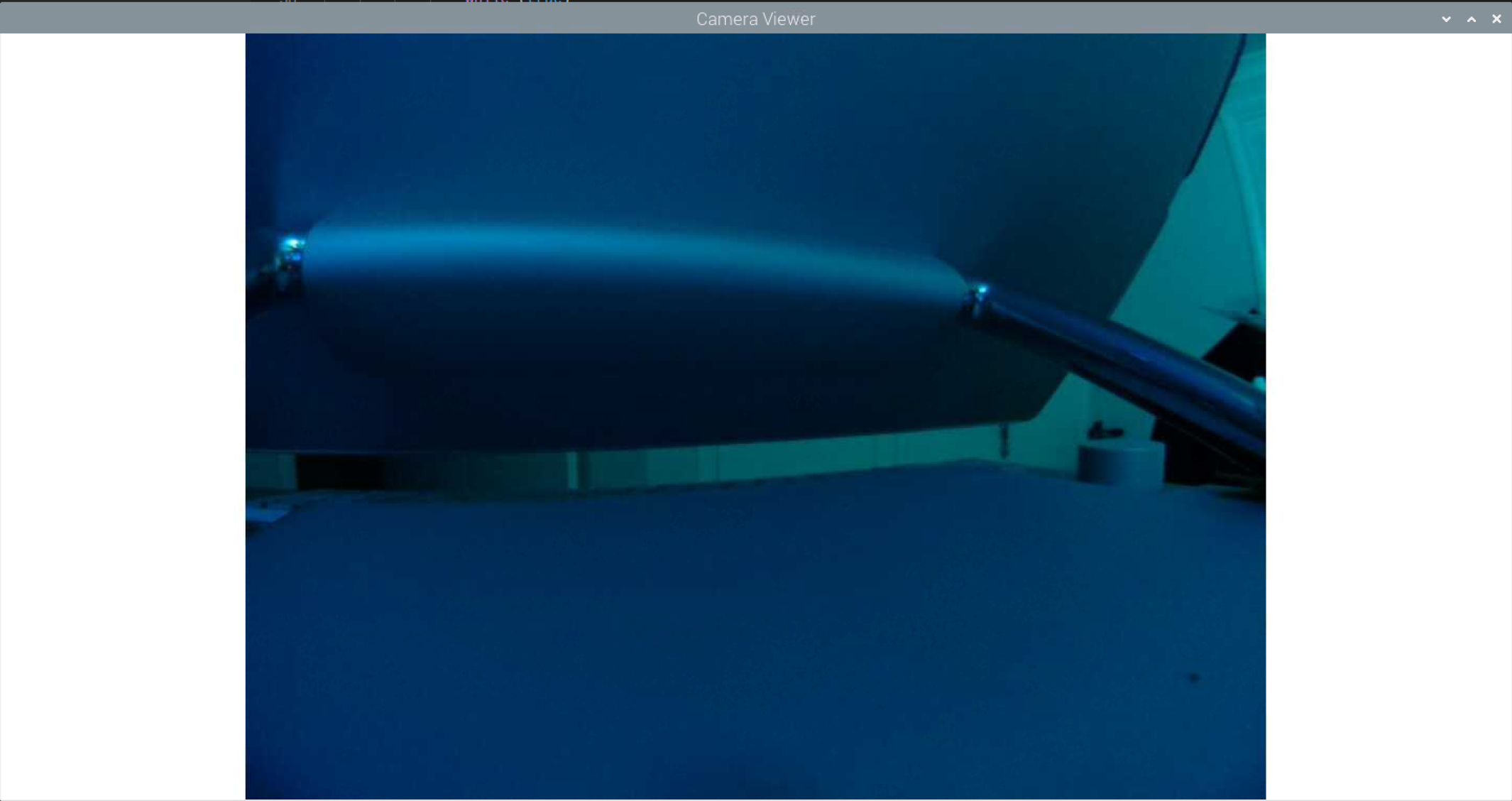

- .NET camera feed viewer on Raspberry Pi with Avalonia

- Python Glowbit server

- Azure Key Vault in .NET applications

- Using GitHub Packages with NuGet

Dr. Neil's Notes

Professionals

Introduction

Being a professional means different things to different people; however, I think there is a certain set of behaviours I would expect to see from anyone who considers themselves to be professional. I have made a list below and described how I perceive the actions as professional.

Polite

There is never an excuse for being rude to people, especially when you are carrying out your chosen vocation. To be polite costs nothing, and earns respect from those around you. Customers can often be rude, colleagues at work may be frustrating, and managers might infuriate you; keep your cool. A professional will always maintain a sense of composure, and respond to a situation in a polite and considerate way, even if they are not receiving the same treatment in return. I will admit I am not always great at following this advice; I often get to a point where I push back rather than take it. It is hard to remember that every action you take in your life can impact your reputation, and your reputation stays with you for a long time.

Helpful

Another low-cost behaviour, and one that can help you build your reputation, is to always try to help the people around you. Customers, colleagues, and managers all need help at times. If you are the person they turn to, then your reputation for being helpful is good. You might not be the person to solve the problem presented; however, if you do everything you can to lead the person towards a solution, then you are showing a sense of professionalism.

Considerate

Having empathy for those around you at all times shows that you are able to understand the fact that people feel differently about different topics. Something you might consider a funny joke might be offensive to other people. If you are not sure, then do not tell it in that group, and certainly don't send it as a team-wide email. Being considerate is about not forcing offense upon people. While you can never prevent people from seeking to be offended by something you have said or done, you can prevent yourself from imposing that statement (or action) on people without their consent. A professional does not have to be walking on eggshells; however, they do need to ensure that their actions are not placing the people around them in unnecessary discomfort.

Record actions

A person who cares about what they do will almost always record the actions they take to reach end results. If you are in a situation that requires interaction with other people, then recording that interaction, either digitally or by taking notes, and then following up with the people involved with a summary and the record of the interaction, is valuable. This allows people to clarify a point, or change their mind (which is always allowed). When you are working alone, capturing the steps you take to reach a goal will help you improve, and validate that you did not miss anything in the process. Records provide a history that can be returned to, long after the event has been forgotten. This is a good practice to enable processes to be repeated, and prevent mistakes from being repeated.

Communicate clearly

Clear verbal and written communication is critical for teams that are working together to become aligned with goals and deliverables. Communication is a skill that can be learned and improved. Whatever work you are doing, being able to clearly communicate aspects of that work will help everyone understand the work. When communicating, consider the following questions: i) why are you doing this work? ii) how are you doing this work? iii) what is the end result? If the people you are working with (and for) can easily understand these three ideas when you explain them, then you are doing a great job of communicating clearly.

Be Thankful

Be thankful to the people you work with for actions that are taking you towards your shared goal. Explicitly thank people for the time they are committing to activities that help benefit the work you are doing. When you have a meeting (or Teams call) with someone, thank them for the time they have committed to being in that meeting. When someone shares thoughts, ideas, and questions, explicitly thank them for the input. It is not hard to say 'Thank you for that ...' to make it clear that value has been added through the contribution. When the team you work with reaches a milestone, thank each person you work with for their contribution. This ties back into being polite, and also provides positive feedback on the addition each person is making.

Show and Tell and Ask

Introduction

Show and Tell and Ask is the name of a meeting I organise with the project teams I work with. This note will explain why these meetings are worth doing, what happens in the meetings, and how to get the most from Show and Tell and Ask meetings.

Alignment

A project that has well defined goals is more likely to succeed. At the very least you will know you have succeeded once the goal is reached, even in part. A team that is aligned on the goals of a project is more likely to reach those goals, than a team that has many different alignments. In all the projects I have ever been part of I try to get the entire team involved in the design, the testing, and of course the creation of the product. Getting everyone on the same page is often a hard thing to achieve. Everyone has a unique set of skills and experiences they bring to a project, and this will tend to lead to people specializing in aspects of the project. Maybe someone is really passionate about databases, and they are likely to gravitate to spend most of their time working on the database. Another team member might be great at user interface design, and spend most of their time designing beautiful user interfaces. This behaviour is normal and in many ways it is great if the project you are working on allows people to excel in their areas of passion.

However this siloed work tends to leave the rest of the team blind to what is happening in other parts of the project. This leads to a situation where the project is missing out on the opportunity for everyone to contribute their thoughts, ideas, and creativity to the whole project.

The Show and Tell and Ask meetings provide a forum to realign a team on the work being done by other members of the team. These meetings allow each member to contribute their ideas and ask for clarification on areas of the project.

Sharing

During the meeting one member of the team will start by demonstrating, and presenting, the work they have been doing in the last few weeks, or months (depending on the scale of the project). This gives the other team members insight into the work being done by the presenter, and a way to understand the contributions that person is making. The presentation should not be a PowerPoint Extravaganza; instead it is preferable to focus on the actual work done, either by demonstrating a functional component, or showing some other output of the work done. This provides a great chance for people to show pride in the work they are delivering. Sometimes it is useful to have an image or animation to explain what is being presented, and in those scenarios a tool like PowerPoint makes sense. However, often a whiteboard picture does the job, and provides a more interactive tool to tell a story and further explain parts of the work being done.

Something to note here is that not all teams are in a position to hold Show and Tell and Ask meetings throughout the project lifecycle. For example, there is no point in holding these meetings in very early stages of a project, when there is very little to show. Another example, is when a project is to maintain an existing product, the progress to show is usually fairly limited. Projects go through different cycles of productivity, planning, creativity, production (development or engineering), delivery, and support. This sort of meeting is most valuable during the creative and production stages of a project.

The Activity

With intention, the meeting is not recorded. As most meetings are now happening online (e.g. on Microsoft Teams) it is very tempting to record meetings to review later, and so people can catch up if they missed the meeting. Being purposeful about not recording a meeting provides two positive outcomes: people are less afraid to ask a question they think might sound dumb, and people are more likely to participate if they cannot 'catch up' later. The people I am talking about that want to 'catch up' later tend to be the managers rather than the people doing the actual work. During the meeting someone (often I do this) should capture the points being raised and discussed. A free-form note-taking tool, like OneNote, is ideal for this.

When holding a Show and Tell and Ask meeting it is good to keep the meeting focussed to a set time. I have found an hour to be a good length of time for these meetings. The person presenting takes 15 to 20 minutes to Show and Tell the work they have been doing. Then the rest of the hour should be used to Ask questions, and solicit feedback on ideas and issues. This Ask part of the meeting is the most valuable part, so make sure you do not fall into the trap of letting the presenter take most of the hour to Show and Tell. The presentation is the trigger to the conversation. The questions asked might start to stray from the topic presented; this is often fine. For example, when a team member sees the details of the work presented it might make them question the work they are doing, and how it fits into the bigger picture. Perfect. This is exactly the sort of thing I am looking for in these meetings. The cross fertilization of ideas, the spreading of knowledge, and the discussion that flows is where you can start to find the gold in your project.

Most teams I have worked with do these meetings at the end of the week, on Friday afternoon. This provides a nice way to wrap up the week, and gives the person presenting the week to get things ready. However, if you find the person presenting is spending a major part of the week getting things ready for the Friday presentation then you have an issue. This meeting is to show the work you are doing and have been doing, not to build specific output for the presentation (see the one caveat to this in the outcomes below).

Once the meeting is over the person who was capturing notes should write up the points made in the meeting, along with any questions that remain unanswered. These meeting notes should be emailed to all the people that were invited to the meeting. While the meeting was not recorded as video and audio, this email acts as an important place to share the conversation and remind everyone of the topics raised. I normally send the email, summarizing the meeting, on the following Monday morning. This allows the team to relax over the weekend, and reminds everyone of the meeting on Monday along with any actions or discussions raised.

Outcomes

There are a number of positive outcomes to gain from holding Show and Tell and Ask meetings:

- Everyone in the team gets an improved understanding of what the other team members are doing, and how other parts of the project are progressing. This leads to greater team alignment.

- Each team member is given an opportunity to ask questions, and make suggestions about each area of the product presented. This enables the combined skill set of the team to contribute to the end result.

- The product being created by the team gets hardened to demonstrations. It is a well known fact that things are most likely to break when you are demonstrating them. The more you demonstrate parts of the product, the more issues you find in front of (what I hope is) a friendly audience.

- The team members each get better at presenting the product, and sharing their passions for the work they are delivering. Getting good at presenting is a skill that takes practice, and this meeting provides practice. Having everyone in the team able to present various aspects of the project enables the project to be demonstrated more readily, without having to wait for one person to be available.

- The product being created by the project becomes more presentable and the team gains experience showing aspects of the product to managers before it gets shown to customers.

- Some team members will use the meeting as a forcing function to spike (prototype) ideas in order to demo some work a bit further along the road map than they currently are with production ready output. This has only positive side effects, as it provides an exploration with the team of an idea of how something might turn out.

The Social Networks

Introduction

In the last couple of years I have pulled away from using social networks. I no longer watch, or contribute to, my Facebook account. Twitter has become something I occasionally use in order to contact people who only seem to respond to Twitter messages. Instagram, Snapchat, and many others have never held interest for me. I want to use this article to explain why I have stopped engaging with these platforms.

Media Driver

For me, it was important to consider the motivation behind running a media platform. Journals, newspapers, almanacs, and magazines have always existed to create money for the publisher, and provide a platform to present the viewpoint of the author(s). Sometimes the author and publisher are the same person, or entity, however not always. These periodicals would historically edit the content, to match with regulations, and maintain a viewpoint aligned with the publisher. Some publishers would refuse to print certain materials for being too 'racy', or promoting views that they considered dangerous.

People believe what they read. I am sure we have all heard the phrase "it must be true, it was written in ....". A large amount of trust is placed (almost certainly misplaced) in the publisher validating that what they produce is true, or clearly marked as fiction.

The challenge is that the truth is often boring, and boring does not sell well. People want to read something simple and exciting. The more exciting, and less complicated a story is, the more people will read it. The majority (a big generalization here) of people do not want to spend an hour reading an article in order to understand the nuances, and details, of a situation.

There is also an effect I will call the Stern Effect, where being outrageous increases the audience size, because not only is it titillating content, it is also often so ludicrous that it is funny. People tuned in to Howard Stern not because he was providing valuable information, but because he could stir people up into saying and doing dumb things that made a large percentage of the audience wonder what he would do next, and tune in to find out. This is entertainment. This form of entertainment draws in a large audience, and therefore makes a lot of money for the entertainer, and the publishing platform.

Serious objective informational media is in decline. A platform that enables, and encourages, people with different viewpoints to publish in the same issue, is becoming rare. While more interesting, and more thoughtful, it requires listening to both sides, and having a level of empathy for both sides. This requires effort, and most people want to be entertained. Caligula knew that the gladiatorial ring is more exciting than the discussions in the senate. To get the people on his side it is far simpler, and more efficacious, to slaughter some Christians, and pay for some horse races, than have an intellectual debate on the pros and cons of some activity relating to taxes and the cleanliness of part of the city. Some things have not changed much; many countries are being run by clowns, in the truest sense of the word. They are entertainers, providing a change from the boring, stuffy conversations. The clown simplifies the challenge to something that most people can understand, even when untrue. The clown is not there to provide truth; the clown wants to misdirect your attention, and then surprise you with something silly and funny.

Back to the topic of social media platforms. These are owned by stakeholders that want to increase revenue. If more people want to see cat videos, then that is what the platform shall promote and provide. If the antics of clowns misdirecting your attention, and making you laugh, help sell advertising then that is what will get promoted further. These platforms have no vested interest in presenting you with a complete world view, where you have to stop and listen and think about multiple sides to a situation. Facebook, Twitter, and countless other platforms, want you to click, scroll, click, click, scroll, exposing you to more advertising, the only (or major) source of revenue they have.

The customer for an advertising company is the advertiser, the company promoting their product. The size of the audience, along with the ability to target specific types of people within that audience, is the product they are selling to their customer. If you use Facebook, Twitter, YouTube, or countless other platforms, then you are part of the product those companies are selling.

While in legal terms you agreed to this, it is somewhat analogous to agreeing to be on the crew of a ship after being press-ganged at knife point. The choice was not exactly made clear, and you are not given an option to use the platform under different constraints. If you have the patience, please read the terms and conditions, which you agreed to when you created an account, on any one of these platforms. You will discover that you have agreed to a slew of constraints enabling the platform to determine what is presented to you and when.

The objective of each of these platforms is to get as many people as possible spending as long as possible watching their screen, repeating the click, scroll, click, click, scroll, scroll behaviour, all the while consuming the advertising that is being pushed alongside the sweet candy of titillation and clown shows. The addiction of the variable mini-dopamine hits experienced is by design in each platform. They have hooked, and enslaved, millions of people to the countless channels of entertainment, disguised as information. This might sound like a strong statement, however consider how much time you have spent on these platforms in the last week. Did you schedule that time in your calendar to consume advertising?

This is not new, it is simply scaled up. In the 1990s I made a conscious decision to not own a television; I did not want to be the consumer of advertising and biased viewpoints. I also felt my time watching TV was not well spent. I would go to the cinema to watch movies on a regular basis, often once a week, however TV seemed pointless. I would prefer to spend the time I had available reading, writing, cycling, in the gym, or sleeping. Twenty years later the rise of social networking platforms was an interesting phenomenon. I participated out of curiosity, and it became a good way to keep in touch with friends all over this little planet. Prior to the rise of the social network platforms, I tried sending out group emails, blogging, and podcasting. These are broadcast mechanisms, and the feedback is in a different time frame to the social networks. The rise of notifications in software, that flag when something might be interesting to you, along with a wider reaching internet, has taken us to a place where people expect responses in seconds, minutes, or at most hours, not days. Combining this with devices that you keep with you at all times drives a new set of behaviours. The social networks take advantage of all of these vectors.

Your phone and the social networks

The timing of the smartphone becoming more popular, and the rise of the social networks, is not coincidence. They are symbiotic in nature. The internet connected phone enables you to post and consume content from almost anywhere, at any time. The social networks need more people to engage throughout the day in order to keep driving those dopamine hits. If you could only access Facebook, or Twitter, when sitting at a wired-in desktop computer, I am almost certain the platforms would have failed to reach the level of success, and value, that exists today. Interestingly the success of the smartphone is also tied to the rise of the social network platforms. If your phone did not have an application that let you share, and consume, on your favourite social platforms, what else would you use it for? Making phone calls perhaps? Listening to music? The smartphone revolution was, in part, fuelled by the adoption of the applications that enabled you to scroll, click, click, scroll, scroll, all day, from anywhere. Now you are part of the product consuming advertising from the bus, while walking, from the sofa while half-watching TV, anywhere, any time.

The politics of media

All this adds up to a set of hard to manage distractions, taking your time from the traction you are attempting to achieve in your life goals. The strong willed may laugh and say they can manage this. Some people might even schedule 'social networking' time in their calendars. If all that these platforms did was push cat videos (or the equivalent) into your focus, it would not be that bad, would it?

However there is another (darker?) side to this ability to target an audience with specific messages, the political side. This creates tribes of people that are willing to believe the same message, and will never (or rarely) hear another message. This allows certain customers of the social network platform to target vulnerable groups with messages that will stick and get reinforced by the platform. The platforms remove the ability to have open, moderated debate. Open and moderated debate is the cornerstone of group decisions and tribal understanding. Some might say it is the cornerstone of democracy, however I am not sure that is true. When the customer of the platform is a political movement, promoting their messages to the audience they wish to reach, echo chambers are created, where the message bounces around between people who buy into that point of view. The fact that the most acceptable messages are short, and easy to understand, leads to a simplification of the true matter at hand. It is far easier to blame a group of people for a certain situation, than understand that the whole system in which everyone exists is to blame for the current situation in which we find ourselves. The world is far more interconnected than ever before, in part due to the underlying technologies enabling the platforms being discussed here. We are a global species, everything we do has impact on people all over the world. It is not possible to pull up the drawbridge and operate in the modern world cut off from the rest of the world. Yet the social network platforms enable this isolationist lie to proliferate. Trumpism and Brexit only succeed by reducing the whole picture to simplified 200 character messaging and videos that are 5 minutes long at most. The whole picture is not provided, the voter is not being given all the information they need to make a complex decision that will change the fortune of their country on the globe.

It is ironic that the social networking platforms, many of which set out with the goal of connecting everyone together, have become a tool to drive the division of more people than we have seen since the Cold War.

Buying In and Dropping Out

I bought into the Facebook view of the world at the beginning. I remember meeting some of the initial folks at The Facebook as they moved into their first office in downtown Palo Alto. There was a high level of excitement, they were connecting the world together. These seemed like good people, with a positive mission, to make the world a better place. The application of technology to help people connect: fantastic, I thought. I set up my own account as soon as it broke out of the education account only model. I convinced other friends to create accounts, and it grew very fast. The obvious term is the network effect: the more people connected, the more valuable it becomes.

Jump forward ten years and the young folks with the vision of connecting everyone together (who are still around) are driven to increase revenue and build a business of value. The stakeholders (shareholders) demand profits. When the majority of profits are generated from advertising, the pressure to drive the addictive behaviour of the users (yes, just like drug users) is increased. The Stern Effect helps provide this; outrage and divisive messaging grab the attention of the user to benefit the customer (advertiser).

Once I started to see this platform being used to drive more division, than I felt was acceptable, in a world that needs a global species attitude to survive, I dropped out.

Will I return? At this point I cannot answer that. Technology is fast evolving and new ideas get introduced all the time. I would like to see the platforms adopt a true global species view, and prevent single viewpoint conversations from proliferating without including multiple points of view, and provide a forum for honest fact based debate. Then I might consider returning to the conversation.

Software Notes

This section of Neil's notes contains notes on the topic of Software.

Notes

- The Social Networks

- Coding Notes

- .NET development on a Raspberry Pi

- .NET Console Animations

- .NET Console Clock

- .NET Console Weather

- .NET Camera on a Raspberry Pi

- .NET Web Server on Raspberry Pi

- .NET Camera Server on Raspberry Pi

- .NET GUI application on Raspberry Pi with Avalonia

- .NET Picture Frame on Raspberry Pi with Avalonia

- .NET camera feed viewer on Raspberry Pi with Avalonia

- Python Glowbit server

- Azure Key Vault in .NET applications

- Using GitHub Packages with NuGet

Development Notes

This section of Neil's notes contains notes on the topic of Software Development.

Notes

Access Granted

Introduction

Imagine living in a world in which you are locked out of accessing information, knowledge and experiences that the majority of people in the world take for granted. You cannot access most of the news, social media feeds, sports games, or computer games. You might think I am asking you to imagine being a prisoner, locked out of the normal world. No. The position in which you find yourself, is one that is shared by many people because products (in this case digital products) are not designed for you. The majority of people are different, maybe they can see better than you, or they can hear notifications that you cannot, or colours that look identical to you, appear to be different to them.

We Are Responsible

This is the place we put many customers because we do not build our software products and experiences to be accessible to anyone other than the 'average' or 'normal' person. Products are excluding people from accessing the riches offered by the digital world because the developers have not put a priority on making sure those experiences are accessible to everyone. Yes, I fully understand this is often considered a business decision. However I would counter with the fact that you could consider quality and security as business decisions. If you are a craftsman, building the best product you can build, would you allow the business to dictate that the quality does not matter? I am sure there are businesses operating that consider security a lower priority, until they get hacked and the entire business is held to ransom.

Consideration

Someone involved in the creation of any product should be considering how to make that product the greatest possible version of that product. In the software development world, building quality and security into the product is a given, however ensuring the product is accessible to as wide an audience as possible is often overlooked. You might hear comments such as, 'well, most of our users are normal and we do not get any value out of supporting people who have bad vision, poor motor skills, etc.' This is simply lazy. Most modern operating systems and web browsers have features designed to help make sure applications, and web sites, are more accessible. As software developers, the first thing we should be doing is ensuring we do not break any of these accessibility features. The guidelines are provided by Apple, Microsoft, and Google.

Proposition

In the last couple of decades every developer has been encouraged to think about building high quality products from the start, using techniques such as Test Driven Development. As all systems are now connected (in some way) to all other systems, security and protecting your product from misuse has become something developers have had to learn how to achieve.

I propose that now is the time to put accessibility alongside the quality and security of all products. It should be a given that digital products are as inclusive as possible and do not exclude a large portion of the audience from benefiting from your creation.

Homework

Have a look at the following websites to understand how the major software companies are supporting accessibility in the environment in which you are building your product.

Apple: Accessibility for Developers

Google: Accessibility For Developers

Don't Gamify My Craft

Introduction

There is a behaviour happening in the business world, and that includes the software industry, that I am not convinced drives a good outcome for customers.

The software product experience

Software is a product that, when created well, delivers a beautiful experience for the people that work with it. I am sure you can think of a digital experience you have had that felt magical the first time, and still leaves a feeling of satisfaction when you use that product again. At the same time I imagine you have had many more average experiences with products, that leave you wondering what the people who built that software were thinking.

One thing I can say with certainty: great experiences in a software product do not happen by chance. Great software is designed that way. The people building that software have an experience they want to deliver, and they work hard to ensure the customer gets the desired experience. There is a sense of craftsmanship that the developers and designers put into the product with the aim of delivering a product they can be proud of. Telling people 'I worked on that' when someone enjoys the product is highly satisfying.

I have a theory that I want to share here: the gamification of a craft acts in opposition to pride in the work you do. The type of person that wants their work to be gamified is the type of person that is less likely to have pride in their work. How can I say that?

Someone that has pride in the deliverable they are creating, is not doing it for points, they are doing it for their own satisfaction, and the experience of their customers. The output from a person is directly represented by the motivations that drive the deliverable. The output from a team, directly reflects the motivations of the team.

A product delivered by a person, or team, motivated to get points in a game system, will reflect that motivation in the experience delivered.

It is entirely possible for a product to deliver a great experience while the people building it also get points in the game they are playing. However, there will always be circumstances where a decision has to be made to focus either on getting points or on delivering an experience.

Collecting Treasure or Cleaning the Kitchen

One of the challenges with gamifying a system is that you have to account for all the things people do not want to do. In order to encourage people to do something they might not otherwise do, a points system is created that will reward a person for doing that task. If the system does not cater for a scenario, then in the game world, there is no benefit to performing a task in that scenario. On the other hand, when a person is motivated by doing something that leads to a good outcome for the product they are more likely to tackle the tasks that are uncomfortable, or less desirable. Let's look at a simple example situation. For the sake of this thought experiment consider this approach is taken from the start of a new project, so there is no debt to pay (in the real world there is always outstanding debt in terms of software already delivered needing to be supported).

Each developer gets measured by how many features they complete (+10 points each), and how many defects get attributed to code they create (-1 point for each defect).

There is a strong incentive to finish features, and make sure they have a low number of defects found. This sounds like a great setup initially, and I can imagine managers signing off on this system to measure the developers. Some managers would get excited enough to set up a leader-board and start driving competitive behaviour to deliver the most features and the fewest defects. What could go wrong?

What is the incentive to make the code more maintainable over time? As a developer you would have no motivation to do a large refactoring of the code in order to reduce the complexity. In fact, as long as you are one of the few developers that understand the complex code, you are going to want to make the code more complicated over time. More complicated code will slow down the other developers from getting feature points, and if you feel confident you can work with it, then you can get more points by delivering more features and fewer defects.
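As a rough illustration of the scoring rule described above, the sketch below applies it to two hypothetical developers. The names Sprint, score, and refactorings_done are illustrative labels, not part of any real tool; the only figures taken from this note are +10 per feature and -1 per defect.

```python
from dataclasses import dataclass

FEATURE_POINTS = 10  # from the note: +10 for each completed feature
DEFECT_PENALTY = 1   # from the note: -1 for each attributed defect


@dataclass
class Sprint:
    """Work done by one developer in a sprint (hypothetical structure)."""
    features_completed: int
    defects_attributed: int
    refactorings_done: int = 0  # the scoring rule has no term for this


def score(sprint: Sprint) -> int:
    """Apply the leader-board rule: features earn points, defects cost points."""
    return (sprint.features_completed * FEATURE_POINTS
            - sprint.defects_attributed * DEFECT_PENALTY)


# Two made-up developers: one chases features, one cleans up the code.
feature_chaser = Sprint(features_completed=3, defects_attributed=2)
code_cleaner = Sprint(features_completed=1, defects_attributed=0,
                      refactorings_done=4)

print(score(feature_chaser))  # 28
print(score(code_cleaner))    # 10
```

Nothing in the rule rewards the refactoring work, so the developer who spent the sprint reducing complexity falls behind on the leader-board.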

What happens all too often is that people who should be on the same team start competing with each other. This is not good, as sabotage is often a great strategy to ensure a competitor fails. If you want to stay near the top of a leader-board then it is smart to make it harder for the people lower on the board to succeed. If you are near the bottom of the board then a great strategy might be to spend time each day looking for defects in the code of those that have the highest scores. Even better would be to build something that causes a defect to start appearing in their code and not yours.

Something to consider at this point is what is the objective of each developer in the team? They have stopped caring about the product and instead now care about the points they are getting. The product produced will reflect this.

Building Great Products is a Team Activity

If your objective is to deliver a great product, then you need a great team. Great teams deliver great products. Attributes of a great team include an alignment of goals within the team that match the goal of the product. If team members are competing with each other, then they cannot work well with each other to deliver the best product, as a combined force. The output of a group should be greater than the output possible from any single individual in that group. Solutions generated by a group should bring the combined intelligence of the group to solve problems. A great team consists of individuals that work together to build great products.

Scaling Development Teams

Introduction

Along with underestimating the size of projects (or maybe because of it), one challenge I repeatedly see in software development teams is underestimating the number of people needed to accomplish the desired goals. In this note the goals implicitly include a time frame, not only the deliverable outcomes.

Note: this is a discussion of when, and how, to scale a development team, and does not cover the act of recruiting people into your team.

It takes an army

Have you watched all the credits for a movie and wondered why so many people were involved? If so, then you are getting a glimpse into the reality of creating a great product. Software (and sometimes hardware) projects can provide the illusion that a handful of smart people can deliver a billion dollar product.

Why then do companies like Microsoft, Google, Meta, and Amazon (all successful billion dollar software companies) employ thousands of developers? If you are thinking it is because they are not as smart as your team, the chances are high you are wrong.

While it is possible to deliver a great, and successful, product with a tiny team (fewer than 20 people), it is the exception, and a very rare one.

Most software projects worth doing (the product makes a noticeable dent) will require growing a larger team. However be cautious about growing too quickly.

Create a Map

Before you recruit an army to help conquer the development mountain, make sure you have a clear map to guide the new recruits in the correct direction, and to the top of the right mountain.

Creating a map requires a small, tight knit, team, and often takes many months. The small initial team should be doing the experimental work that validates the route, plotted in the map, can be followed, and the development project completed. This map might change several times in this process, it is highly likely that the direction, and goals, change during this first map making phase.

This map making is the foundational work. At some point during the map making it will become clear that the direction is now set. This is the time to scale up the development team.

Measure Output

In order to scale successfully, be careful not to measure the output of individuals, instead measure the output of the entire team. Measure the total team output and velocity.

Measuring the output of individuals will restrict growth of the team. Mentoring people, and supporting new starters, will reduce the output of individuals. The team should be focused on increasing the total output of the team over time. If the team uses Sprints, or some form of short time frame milestones (and you should), then each week, fortnight, or month, track the output from the team in terms of progress along the map, and delivered value.

Once a team is aligned to increasing the overall team output, then the dilemma of supporting new recruits dissolves away.

Capture Knowledge As Content

As new people join a team, they will ask questions, in order to understand what is being created, and how the work is being done. Capture these questions, and the answers, in a document, or wiki, that will help the next new people get going faster. As this content grows, it will become a self-serve new starters guide. This new starters guide will enable faster onboarding of new people.

Identify Mentors

As new people join the team, some of those people will be natural mentors for future new starters.

Imagine you have a small core team of ten people, five of whom are capable of mentoring new recruits. In order for the team to double in size, each of those five mentors will need to support two new starters. This will certainly slow down the output of the five mentors, and initially the entire team output. However after a couple of months the total team output, with twenty people, should be noticeably higher than with ten people.

Out of the ten new starters identify the five people most capable of mentoring the next wave of new starters. Now the team has ten mentors and could potentially recruit twenty new starters. Six months into this scaling process you could have forty people in the team, and be capable of doubling again.
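The arithmetic in the example above can be sketched as a small loop. This is only a model of the numbers used in this note: the function name scale_team is hypothetical, and the rule that half of each wave of new starters become mentors is an assumption drawn from the 'five out of ten' example.

```python
def scale_team(team_size: int, mentors: int, waves: int,
               starters_per_mentor: int = 2) -> list[tuple[int, int]]:
    """Return (team size, mentor count) after each wave of recruiting."""
    history = [(team_size, mentors)]
    for _ in range(waves):
        # Each mentor supports a fixed number of new starters.
        new_starters = mentors * starters_per_mentor
        team_size += new_starters
        # Assumption: half of every wave can mentor the next wave.
        mentors += new_starters // 2
        history.append((team_size, mentors))
    return history


for wave, (size, mentor_count) in enumerate(scale_team(10, 5, waves=2)):
    print(f"wave {wave}: team={size}, mentors={mentor_count}")
```

Starting from ten people and five mentors, two waves give a team of twenty and then forty, which matches the figures in the text.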

Output does not scale with the team

This probably should not need to be said, however here it is; the output of a growing team will not double when you double the size of the team. An increased team size should be able to achieve more than a smaller team. The output does not scale linearly with the team size. As a team grows there is more overhead, in terms of communication costs, and alignment costs. To keep a large team aligned takes work from everyone.
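One way to picture the communication overhead is to count the possible one-to-one conversations in a team. This pairwise model is an assumption brought in for illustration, not a measurement from any real team, and communication_paths is a hypothetical helper name.

```python
def communication_paths(team_size: int) -> int:
    """Number of distinct pairs of people in a team of the given size."""
    return team_size * (team_size - 1) // 2


for size in (10, 20, 40):
    print(f"{size} people: {communication_paths(size)} possible pairs")
```

Under this model ten people have 45 possible pairs, twenty have 190, and forty have 780. Doubling the team roughly quadruples the number of pairs, which is one reason the output does not double along with the head count.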

A note on standards

This relates to the Agility from Diversity topic. At a small scale, ten to fifty people, having well defined standards for both the production and process, will help the team go faster. This is not the time to argue about 'tabs vs. spaces' or 'where curly braces should go', follow the standard to increase collaboration and velocity. However as a team grows, it will split into sub-teams, each sub-team will have different (albeit aligned) goals. Allowing each sub-team freedom to define their own process and standards will enable them to go faster, and be more agile. If you try to apply a single development process for thousands of developers all with different targets, scaling becomes far harder, and potentially impossible. The important point is to ensure the sub-teams are aligned with the bigger goal, and each sub-team output is measured.

Debt Collection

Introduction

Every successful product carries debt. The moment you start shipping a product to customers, you are creating debt. If the product succeeds it will need to be maintained, and supported, for a period of time. Even if you release newer versions, the old versions will not vanish. Code I wrote at the end of the 1980s is likely still running somewhere, on some machine, in a system that is being supported by someone. Sorry, not sorry.

Leaving garbage on the floor

When you are at home, or your work place, and you see some garbage on the floor, a discarded wrapper, or an empty drink can, do you leave it there? Do you pick it up and throw it in the recycling? Do you expect someone else to deal with that?

If you are the person that picks it up and deals with it when you see it, then you will have less work (less debt) when you clean the house (or workplace).

If you expect someone else to deal with it, then you are following the SEP principle. SEP stands for Someone Else's Problem. This is common in the workplace, and hopefully less common at home. Once you have SEP, you have debt mounting up. As with financial debt, the faster the debt is cleared, the less interest you need to pay on it.

Paying Interest

With product (or technical) debt, the interest comes in several forms. Some of the interest payments will make the debt grow faster. For example, adding a new feature to an existing product might require considerable rework of the existing product. To avoid that rework, a clever hack is found that still gets the desired outcome. These hacks often create more debt as they compound. The product becomes a collection of duct tape and string holding things together to make things work. The cost to remove the hacks, and get the work done properly, is now higher.

Interest will need to be paid for any product that requires security. Most software products require security, as they need to connect to networks to operate, and that connection needs to be secure. Security is an area that needs constant attention: what was secure two years ago is less secure today, and likely not secure at all in five years.

As a product ages the tools used to build it will age, if the product is not updated to work with modern tooling, then the interest payments come in the form of slower builds, and slower run-times, than you would get from more modern tooling. The distance of a product from the latest development tools is another form of interest being charged on the debt.

So what?

Many products (especially software) are held together by this continual patching and hacking, to keep things working. It still works, people even pay for it, so what is the big deal? The value of most software products is based on where they can go next. Software that stagnates will fall out of favour, and eventually will be insecure, or fail to even load on modern operating systems.

If you want people to keep using your product you need to keep updating it. To keep updating it gets more and more expensive when you have less robust architecture underpinning the product. Each time you walk past something broken in the code, you are ignoring the increasing debt.

As a product ages, you still need to maintain it, support it, and enhance it. That work requires people to do the maintenance, support, and enhancements. The longer the product is around, the older the core code base, and the tooling used to build it, the harder it will be to hire people that have the skills to work on the product, or want to work on the product. The interest payment now comes in the form of an increased cost of doing the work to move the product forward.

Clean as you go

The solution is to clean as you go: when you see garbage on the floor, pick it up and deal with it, immediately. This will reduce the technical debt, and the interest payments. The longer you leave the debt, the harder the work to remove the debt. Spending the time to do the rework that enables the product to maintain a robust architecture will make the next enhancement easier, and cheaper. Keeping the product updated to work with the latest technologies and tools will take less work than attempting to update after skipping several versions. The distance between consecutive language and tooling updates is always smaller, and therefore less work, than the distance to cover when upgrading after skipping multiple versions.

In a large code base, when you find something that can be updated, or fixed, fix it, and also fix all the other things that have the same issue.

For example, you discover a control being used has a new version available. You want to use that new version for a feature you are working on. First update all the uses of that control in the code, not only the one where you want the new features. If you do not do this, the code will end up with several different versions of the same control being used, this is debt with plenty of interest payments. When you update one thing, update all the 'same' things.

Leading Software Development Teams

Introduction

A topic of discussion that keeps repeating is how to lead a software development team. Often the words used are different, and the semantics are important. The discussion often starts focussed on managers who seem to be struggling to *manage* the software team that reports to them. When I hear this I understand the problem almost immediately. What most companies want, from a software development team, is the outcome of high quality software, that can scale. It is then curious that, to achieve this desired outcome, the focus is placed on the details of management, process, and control, rather than the outcome desired. In this Note I will describe some of my observations working with software teams for over thirty years.

The Management Myth

In the introduction (above) I intentionally italicized the word manage, as I believe this is the first problem with the semantics of how we describe the act of helping a software development team achieve the desired outcomes. How do you manage creativity and innovation? I propose you cannot manage creativity and innovation, however you can lead people to be creative and innovate.

One challenge I have observed is that people who do not love the act of creativity in software development see their career path as moving into management. These people are looking for a job where they can pretend they are in the software development business, and yet not be involved in the creation of software. Another challenge compounds the previous one: people who do enjoy software development, and are looking to move their career forward, see that the higher salaries go to managers who do not write code. The obvious conclusion is then to become a manager, and stop writing code.

Occasionally I hear managers proudly state 'I have been a manager for 5 years, I do not write code anymore'. What I hear is 'I do not like building software, so I followed the Peter Principle, and got promoted out of something I am never going to be good at'. The trouble is these same people, who are not passionate about the creation of great software, are now supposedly managing people to achieve something the manager does not care deeply about.

A good software development team leader is never too senior to write code. I have observed this repeatedly in the companies I have worked with, from start-ups to large software companies, the best leaders of software teams (and sometimes big tech companies) keep their hand in the development process. They write code, and review code changes.

I have never observed a great manager of a software team that is not hands on. Please do not fall into the trap of believing creativity and innovation can be managed by people not actively involved in the creativity and innovation.

Generals are not Leaders

The generals sit a long distance from the front line, getting reports of wins and losses, and then making decisions as to who will die next. This is a terrible model for motivating and supporting a team of creative, intelligent people to achieve their goals. Many managers seem to have an aim to be generals.

Supporting a software development team requires a leader that is sitting with the developers, in the trenches, dodging the same bullets they are dealing with each day. The developers you are working with should know you have suffered the shared pain of building the product. A good leader feeling the same pain as the developers, each week, will be actively looking for ways to remove the pain for everyone. The bad leader will look for a way to remove the pain for themselves only; often this is achieved by not being actively involved in the software development process. This person is becoming the general that is losing touch with reality.

To be a good leader in a software development team, be part of the team, not an outsider. Actively be on the same side as the developer to get things done, get hands on with solving the problems. A good leader is building (compiling) the software multiple times a week, doing code reviews, and actively contributing to the code base.

Motivated and Intelligent

The role of a leader in a software development team is to create an environment that attracts intelligent creative people, supports those people to do their best work, and motivates the team to deliver the required outcomes.

Intelligent and creative people like to work with other intelligent and creative people. Software developers want to know the people, they are working with, are helping the team move forward, towards the goal of delivering the next feature in the product. People that are not actively contributing to the success of the team, are never going to get respect from the software developers working to make the product better.

There is always going to be a certain overhead for each software development team in a large organization: reporting upwards on the progress (PowerPoint programming). However this should never become a full time job for someone leading a software team. If you are an intelligent and motivated contributor to the team, you can be a better leader.

Programmers are People

The intelligent, creative people building great software are human beings. Any manager who talks about people as resources will lose all respect from the people they claim to be motivating. Software development is a team activity. The team is made up of people, and each person has their own personal goals and motivations. A good leader allows people to be their best selves, in the team, and aligns the personal objectives of the team members with those of the team, and the company.

Treating creative people as movable (or replaceable) resources that can be redirected to work on any other part of the software development, at a moment's notice, is to deeply misunderstand how great software is created. If you treat software developers the same way a fast food chain treats staff, it will lead to the equivalent of fast food in the software product. It will not be a great product.

Team = Software.

Software = Team.

The software created will always be a direct reflection of the team, and the people, creating that software product. A dysfunctional team will build dysfunctional software. A functioning team, is made up of people that work well together to deliver a shared vision.

Macromanaging not Micromanaging

The shared vision is important to get the best outcomes. Some hands-on managers lean in to micromanaging, trying to control the process and mechanics of how every line of code gets written. This micromanaging does not scale well. A great software leader can perhaps micromanage fifty to one hundred developers. Most leaders will not get far past ten people. The reason micromanagement often happens is because the leader does not believe the people in their team understand how to deliver the correct outcomes. This is a trust issue that will, most likely, be validated, because no one gets everything right all the time.

A better approach is to move towards macromanaging. Start with the big picture and make sure everyone on the team understands why the work is being done, and the goals of the project. Then work with each person to find out how they are adding value to that team goal. Systems like OKRs (Objectives and Key Results) can help here. The team should have a set of well defined objectives and measurable results. Then each person in the team should define their own personal career objectives and the measurable results. The objectives and results of each person should contribute to the team objectives and results. If they do not, then you have a challenge that needs to be solved, maybe by moving people into a team where they can align their objectives to the team objectives.

Once you have set objectives and measurable results for the team, and the people in the team, get out of the way, and get other things out of the way. The role of the leader is to support the individuals to hit their objectives, with the knowledge that doing so helps reach the team objectives. This is not a once-a-year activity. Tracking the progress for each person and the team should be done monthly, or at the very least quarterly. Working daily directly with the team to support the objectives allows a leader to keep their finger on the pulse of progress without the need to micromanage.

Macromanaging has the desirable effect of giving each person in the team an understanding of the bigger picture of how their work contributes. Micromanaging often leads to developers not understanding how the work they are doing contributes to the team, or company, goals.

Software Bill of Materials

Introduction

No innovation happens in isolation in the software world. Software builds upon what came before. Software also drags along historical artifacts, for example the floppy disk as a save icon. As the complexity of software grows, and the interconnectedness of software increases, so does the reliance on shared technology. Consider that the entire World Wide Web relies on a set of protocols for data transfer that are shared by every single software application that accesses it.

In order to reduce the need for every single software application to rebuild an implementation of the basic building blocks, many code libraries are shared across thousands (or millions) of software applications. Each software application that has any level of complexity relies on code libraries, platforms, and frameworks written by other people. The full list of dependencies for a software product is known as the Software Bill of Materials (SBOM).

Licensing

Some of the libraries being used by software are commercial, and require a payment (monetary or otherwise) to use the library. Other libraries are free, and have no restrictions.

In order for a software product to be legally compliant, it is important to know that all required licenses are paid. Sometimes the cost will be a monetary fee, other times it might be an inclusion, or recognition of the authors of the component being used in the product. Some open source software components require that any software using the component is also open source.

With a full list of all dependencies, it is possible to know if the software is compliant with all the licenses required to ship that software product.

Updates

Most code libraries are being updated on a regular basis. Software is never finished, merely abandoned. Software updates typically improve functionality and performance, fix bugs, and remove security issues.

A software product should aim to keep the components, upon which it depends, updated to reduce the risk of security flaws, and get the benefits of the latest updates.

A full list of components, used to create the software, is critical to understanding what needs updating, and deciding when to update.

The Document

A software bill of materials (SBOM) is a document that describes all the components that are used to create a software product. As the libraries being used by a software product will often also use other libraries, the software bill of materials document describes all the dependencies down the supply chain.

Also included in the SBOM document is the license information, and the version of each component being used. An industry standard format for an SBOM document is SPDX; more details can be found here https://spdx.dev/
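To make the idea of 'all the dependencies down the supply chain' concrete, here is a minimal sketch that walks a dependency graph and lists every component once, with its version and license. The component names, versions, and licenses are made up for illustration, and the output is a plain list, not a valid SPDX document; real tools produce the standard formats for you.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    version: str
    license: str
    depends_on: tuple[str, ...] = ()


# Hypothetical catalogue of components; none of these are real packages.
CATALOGUE = {
    "my-app": Component("my-app", "1.0.0", "Proprietary", ("web-lib", "json-lib")),
    "web-lib": Component("web-lib", "2.3.1", "MIT", ("http-lib", "json-lib")),
    "json-lib": Component("json-lib", "13.0.0", "MIT"),
    "http-lib": Component("http-lib", "4.1.0", "Apache-2.0"),
}


def bill_of_materials(root: str, catalogue: dict[str, Component]) -> list[Component]:
    """Return every component reachable from root, each listed once."""
    seen: dict[str, Component] = {}
    stack = [root]
    while stack:
        name = stack.pop()
        if name in seen:
            continue  # shared dependencies are only recorded once
        component = catalogue[name]
        seen[name] = component
        stack.extend(component.depends_on)
    return sorted(seen.values(), key=lambda c: c.name)


for c in bill_of_materials("my-app", CATALOGUE):
    print(f"{c.name} {c.version} ({c.license})")
```

Note that http-lib appears in the result even though my-app never references it directly; it arrives through web-lib, which is exactly the kind of dependency that is easy to miss without a full list.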

A number of tools now exist to help manage and maintain the SBOM document. Ideally this would be created as part of the build in the Continuous Integration (CI) step of software production.

Microsoft has an open source project here https://github.com/microsoft/sbom-tool

FOSSA has a set of tools that can be found on their website https://fossa.com/

Conclusion

'Software is eating the world' is a statement made by Marc Andreessen in 2011. In 2023 this can be extended to: the world is eating software, that is eating other software, that is eating the world.

The SBOM is your ingredients list. Would you buy, and eat, food that does not have an ingredients list? Why then do you use software that does not have an ingredients list?

Coding Notes

This section of Neil's notes contains notes on the topic of Coding.

Notes

- .NET development on a Raspberry Pi

- .NET Console Animations

- .NET Console Clock

- .NET Console Weather

- .NET Camera on a Raspberry Pi

- .NET Web Server on Raspberry Pi

- .NET Camera Server on Raspberry Pi

- .NET GUI application on Raspberry Pi with Avalonia

- .NET Picture Frame on Raspberry Pi with Avalonia

- .NET camera feed viewer on Raspberry Pi with Avalonia

- Python Glowbit server

- Azure Key Vault in .NET applications

- Using GitHub Packages with NuGet

.NET Development on a Raspberry Pi

Introduction

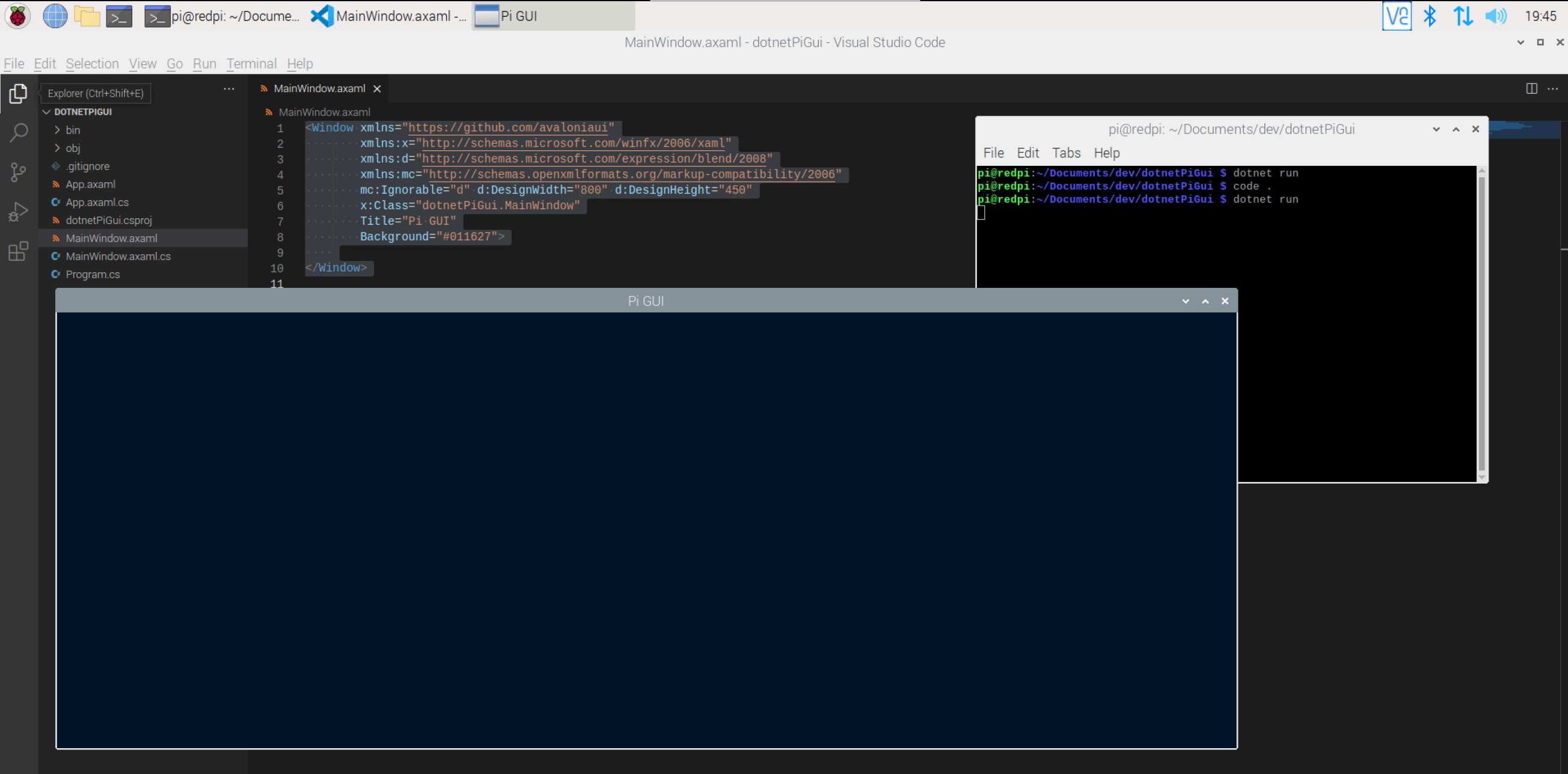

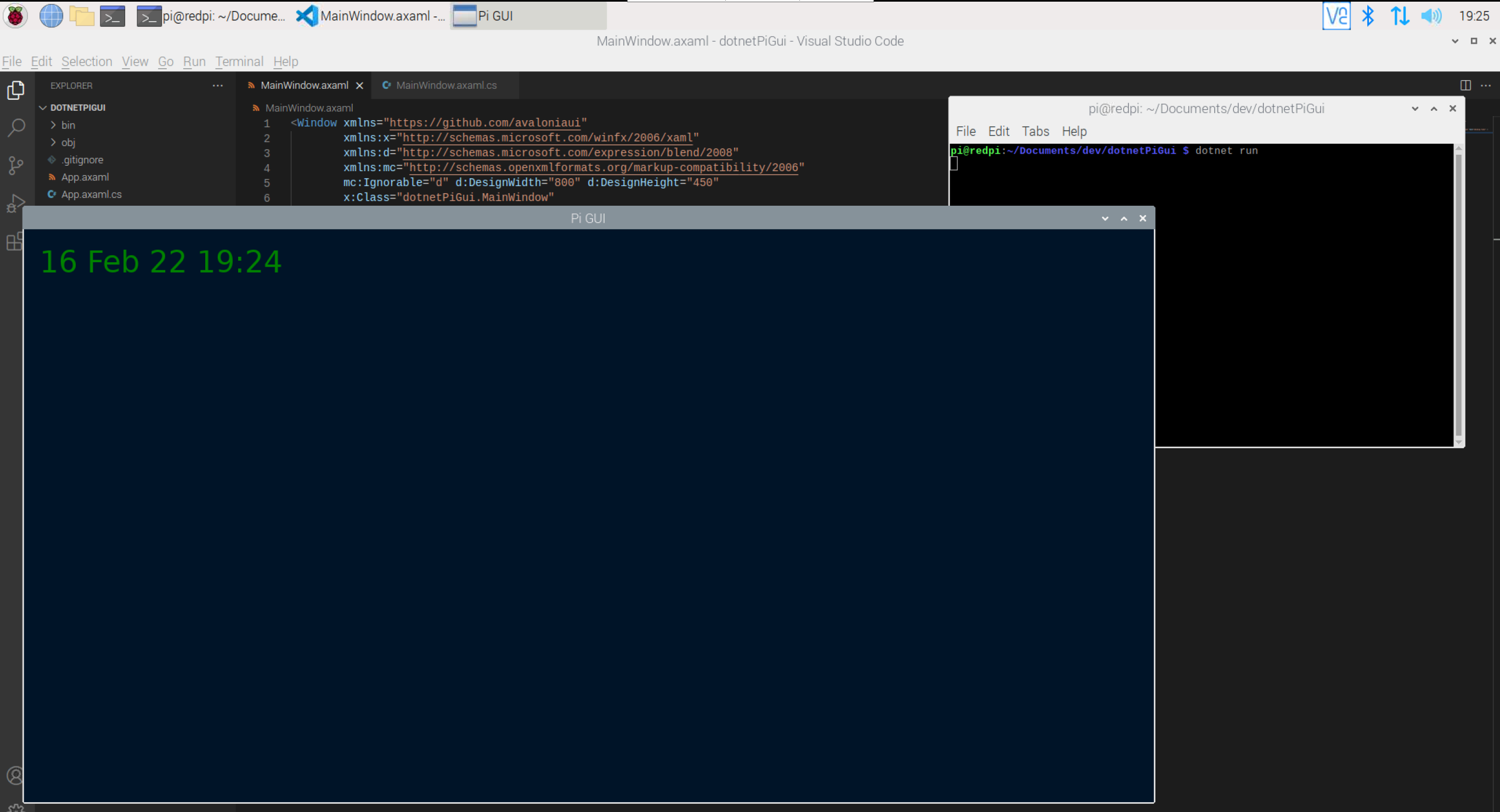

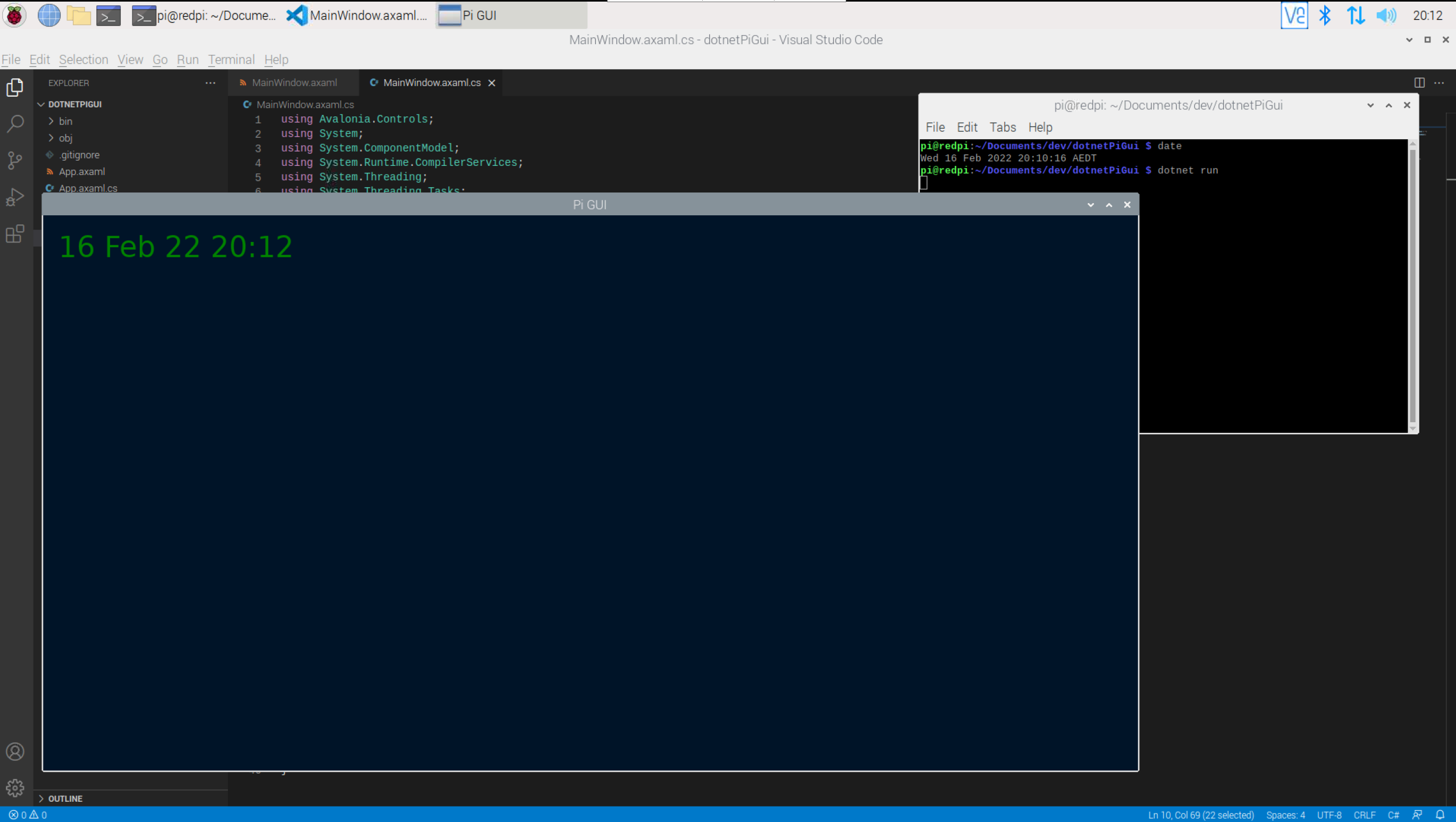

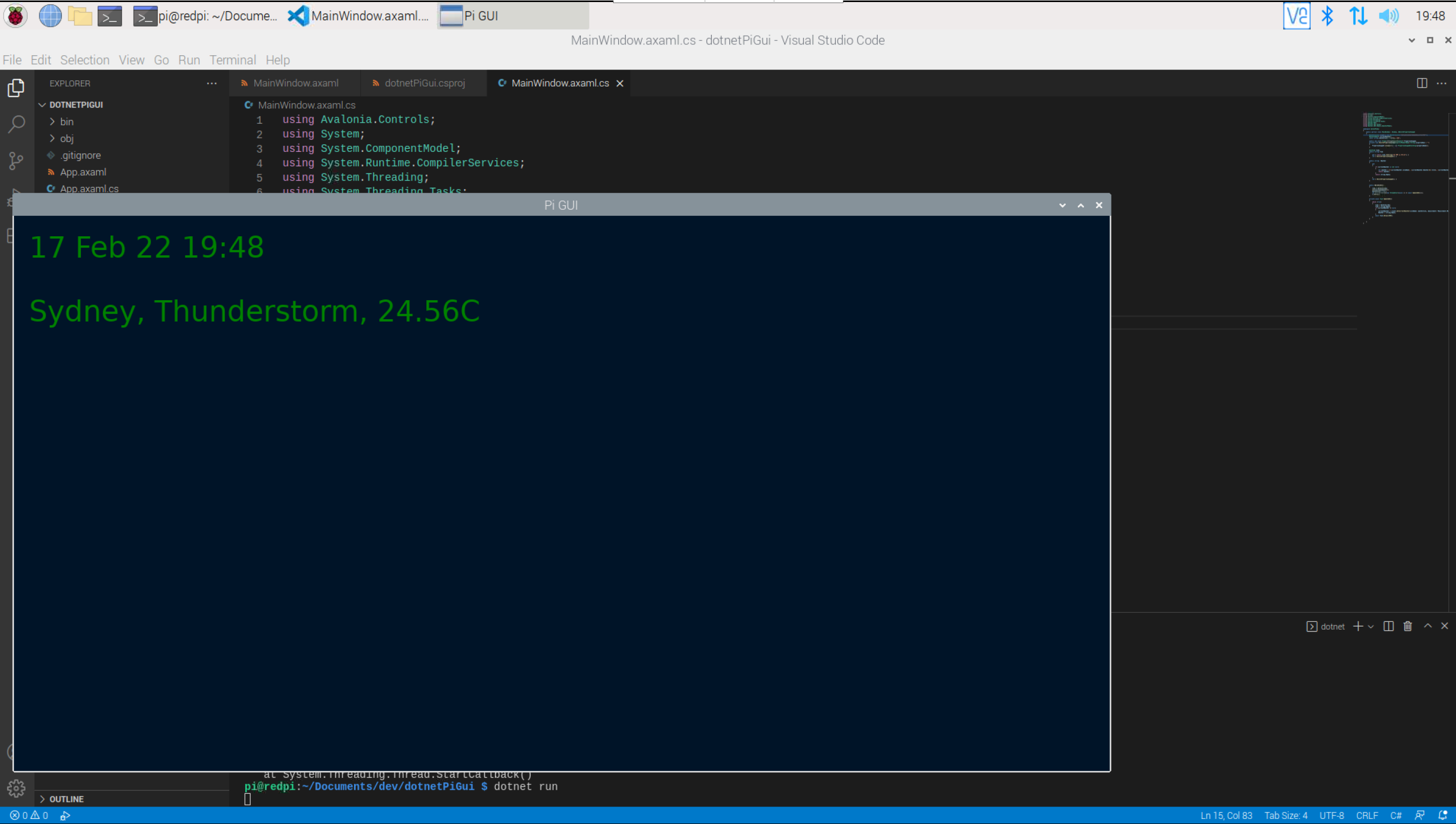

With the release of .NET 6, I thought it would be fun to try getting a Raspberry Pi working as a development machine. It was pretty straightforward and I will share the steps here to get going. I have decided to use my existing development machine to access the Raspberry Pi, saving the need for another screen, keyboard, or mouse.

A video that accompanies this Note can be found here

Get a new Raspberry Pi image setup

The quickest way to get started with a Raspberry Pi is to download the Raspberry Pi Imager from the Raspberry Pi software page https://www.raspberrypi.com/software/. Follow the instructions to download the app for Windows, Mac or Ubuntu and create an operating system image on an SD card for your Raspberry Pi.

If you are plugging a mouse, keyboard, and monitor into your Raspberry Pi you can skip forward to the section titled Install .NET on Raspberry Pi.

Headless Setup

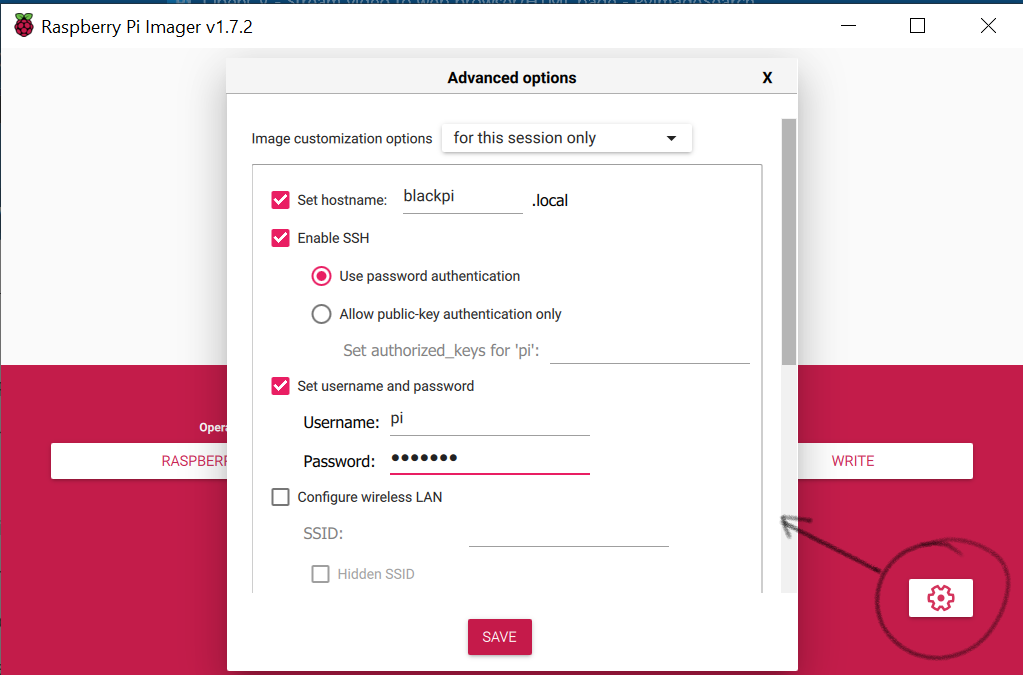

You can set up your Raspberry Pi to work without a keyboard, mouse or screen attached to the Raspberry Pi. Instead you can use your laptop or desktop computer to access your Raspberry Pi, this is called 'headless'. The latest Raspberry Pi Imager software makes this easier, and more secure with the Advanced options. Before starting to write the boot image to the MicroSD card, select the Advanced options, allow SSH, and set the username and password as shown.

The old way of setting up a headless Raspberry Pi

If you have an older Raspberry Pi image and want to enable headless mode, then before you plug your micro SD card (the one you imaged with the Raspberry Pi OS in the previous step) into the Raspberry Pi, you need to add one file to the root folder of the micro SD card.

Once the Micro SD card is imaged in the above step:

1. Open the root folder on the SD card.

2. Add a file named ssh to the folder. This file should not have any extension, and it does not need to contain anything.

This lets you access the Raspberry Pi with Secure Shell (SSH).
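If you prefer to script that step, a minimal Python sketch might look like the following. The function name enable_ssh and the example mount point are assumptions; the root folder of the SD card will appear under a different path depending on your operating system.

```python
from pathlib import Path


def enable_ssh(boot_folder: str) -> Path:
    """Create an empty file named 'ssh' (no extension) in the given folder."""
    marker = Path(boot_folder) / "ssh"
    marker.touch(exist_ok=True)  # an empty file is all that is needed
    return marker


# Example call, using a hypothetical mount point for the SD card:
# enable_ssh("/Volumes/boot")
```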

Plug in the Raspberry Pi

Insert the Micro SD card into the Raspberry Pi, and then plug in an ethernet cable (that is connected to your network) and attach the power cable. The Raspberry Pi should show flashing LEDs.

Get the IP address of your Raspberry Pi

In order to use SSH from your computer to access the Raspberry Pi you need the IP address of your Raspberry Pi.

To obtain the IP address:

1. Plug in your Raspberry Pi as in the previous step.

2. Open a terminal on your computer.

3. Use ping to find the IP address of your Raspberry Pi.

ping raspberrypi.local

NOTE: if you get a response from ping that the device could not be found, it could be that the Raspberry Pi is still setting things up. The first time you put a newly imaged SD card into the Raspberry Pi it can take a few minutes to complete setup and be ready to work with.
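The same lookup can be sketched in Python, which may be handy if you want to script these steps. This assumes your computer can resolve .local names (multicast DNS); find_address is a hypothetical helper name, and the resolver parameter exists only so the lookup can be swapped out when trying the code without a Raspberry Pi on the network.

```python
import socket
from typing import Callable, Optional


def find_address(hostname: str = "raspberrypi.local",
                 resolver: Callable[[str], str] = socket.gethostbyname
                 ) -> Optional[str]:
    """Return the IPv4 address for hostname, or None if it cannot be found."""
    try:
        return resolver(hostname)
    except OSError:
        # socket.gaierror is a subclass of OSError; the Raspberry Pi may
        # still be completing its first boot, so a retry later may succeed.
        return None


# Example call:
# print(find_address() or "not found yet, try again in a few minutes")
```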

SSH to the Raspberry Pi

Now you can open a Secure Shell connection to the Raspberry Pi from your computer in a terminal.

Replace <ip address of your pi> with the IP address from the step above. Do not include the angle brackets.

ssh pi@<ip address of your pi>

It is also perfectly fine to use the name of the device for ssh.

ssh pi@raspberrypi.local

You will be prompted for the password for the pi user.

NOTE: if you used the Advanced options in the Raspberry Pi Imager, then the username and password will be set to the options specified in that step. You will not need to change the password in the next step.

The default user when you set up the first time is pi, and the default password is raspberry.

Change your password

If you are not prompted to change the password on first login then follow these steps.

In your terminal when you have connected to SSH, type

sudo raspi-config

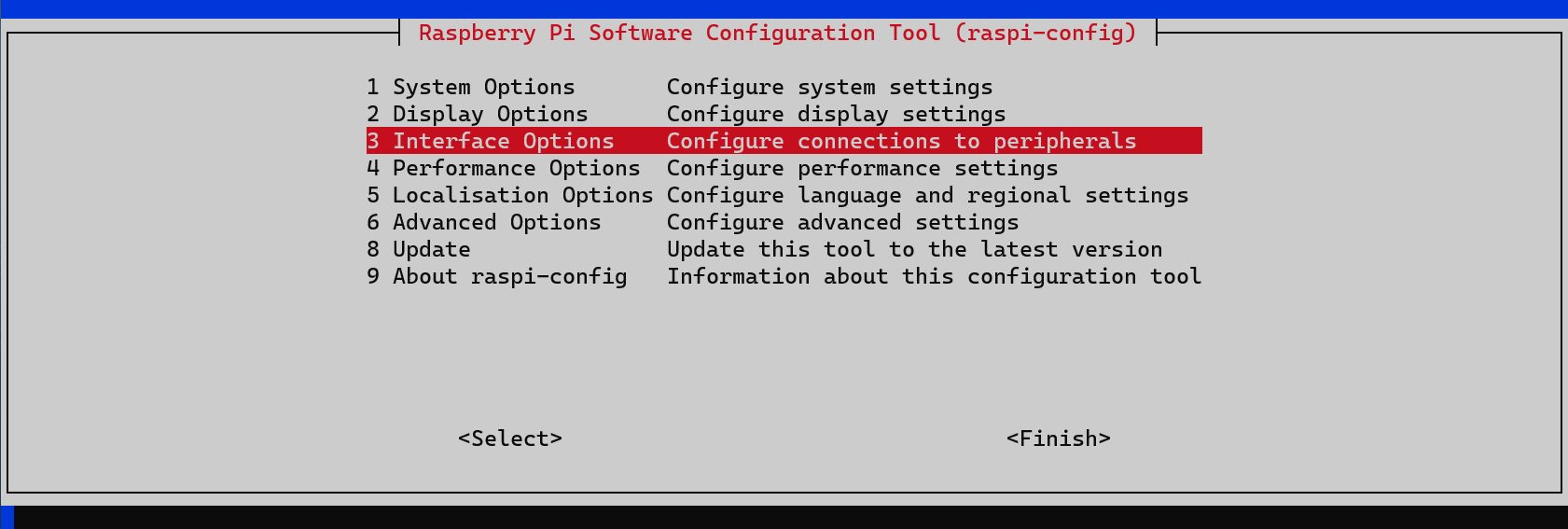

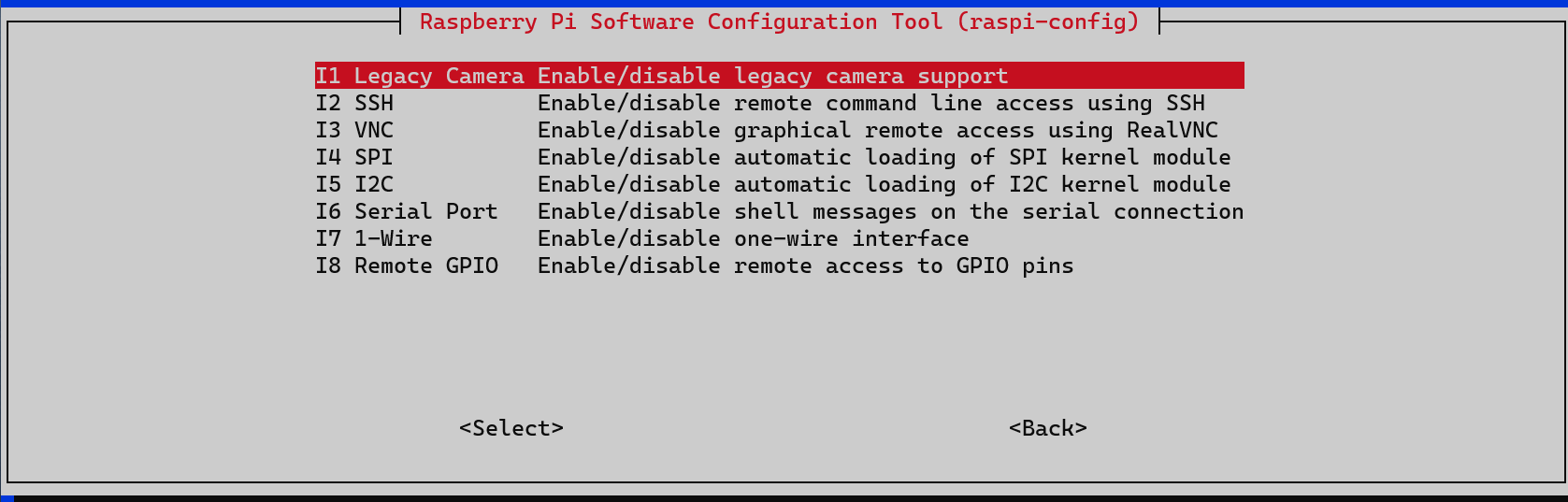

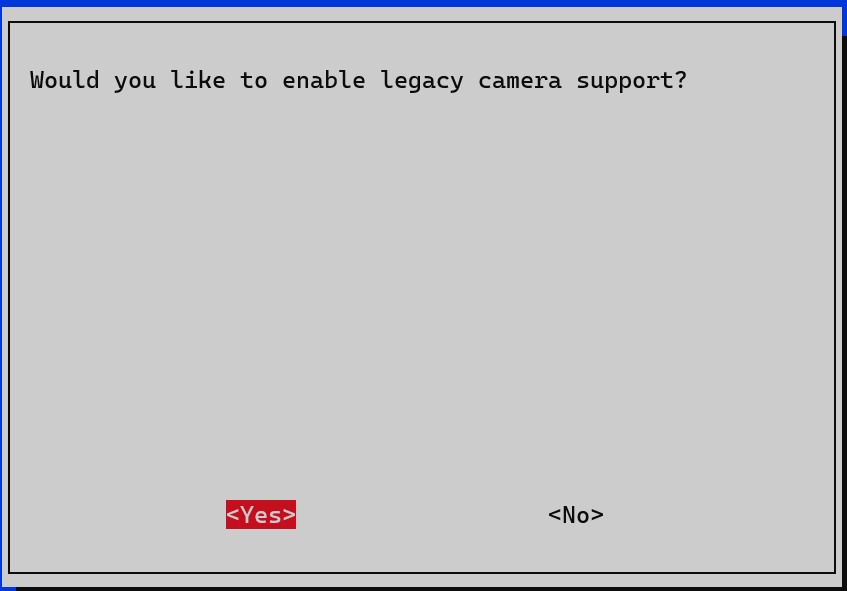

This will launch the config app in your terminal. Use the arrow keys to navigate the menu.

1. Navigate to System Options and press Enter.

2. Navigate to Password and press Enter.

You will be guided through changing your password.

Connecting with VNC

You can do most of your work from the terminal, however it is not as productive as having the full graphical shell for development work. To access the graphical shell you can use VNC. To set up VNC on your Raspberry Pi, return to the SSH prompt in your terminal as described in the step above.

Return to the Raspberry Pi configuration. From the Terminal enter

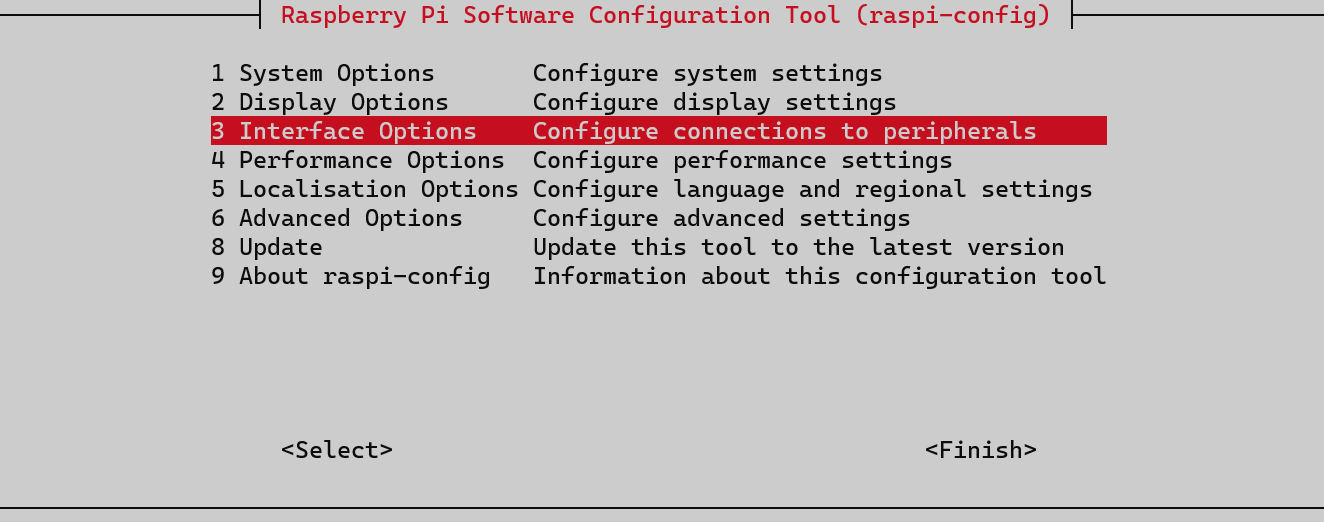

sudo raspi-config

Then select Interface Options

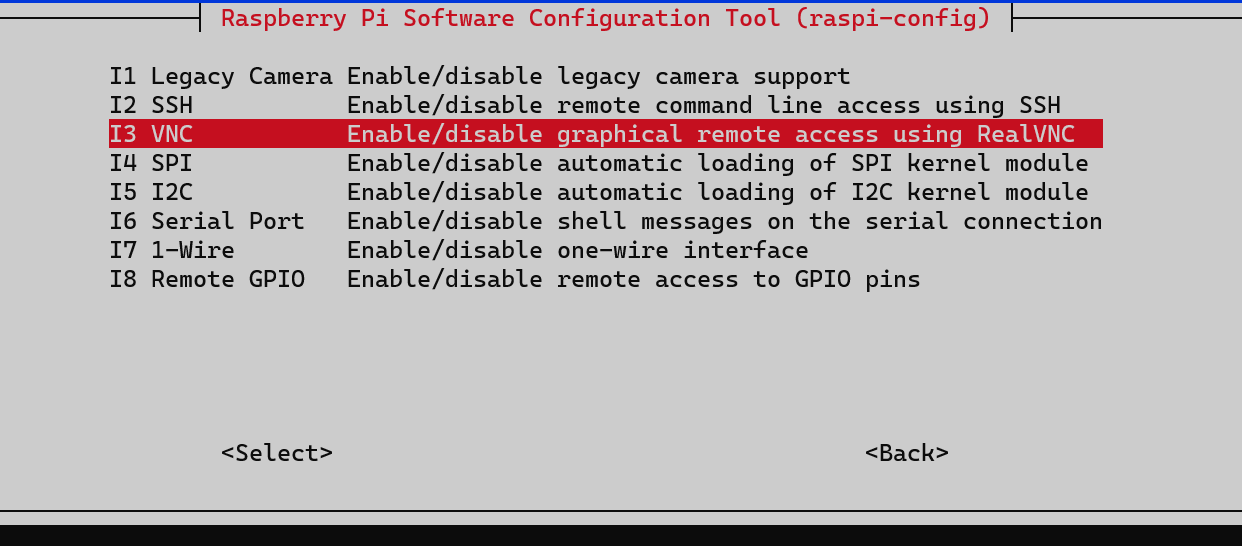

Then select VNC

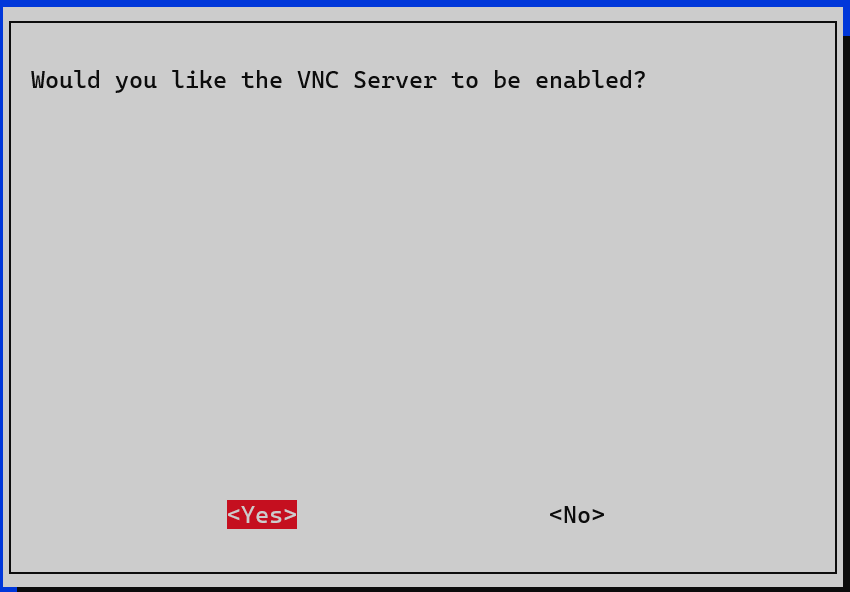

Now enable the VNC server on the Raspberry Pi

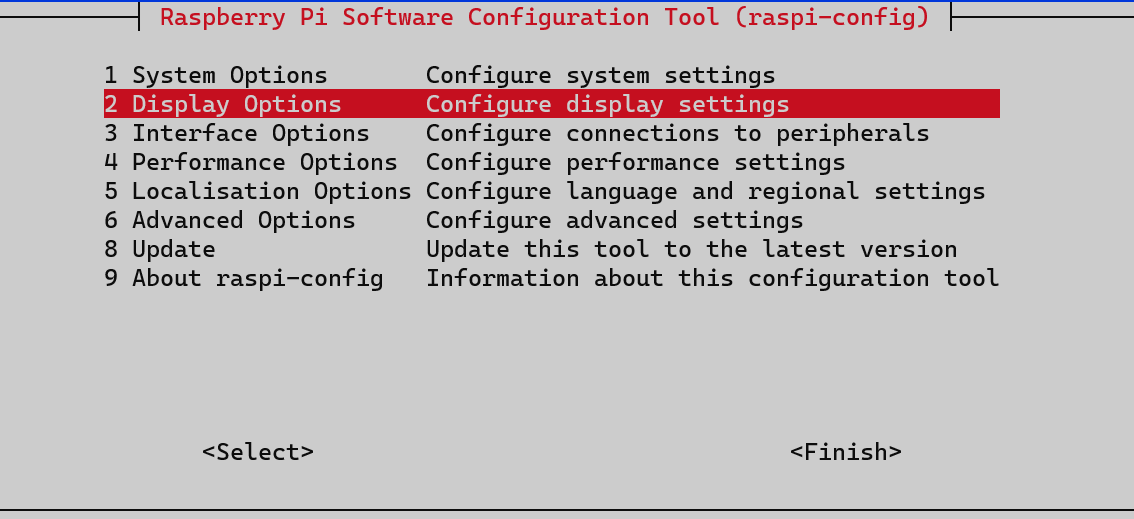

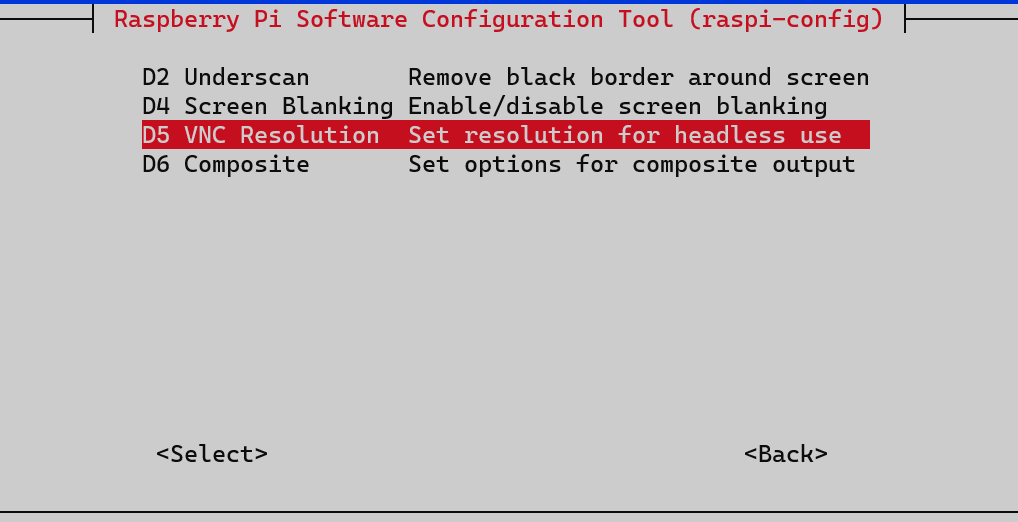

Then select the Display Options

Select VNC Resolution

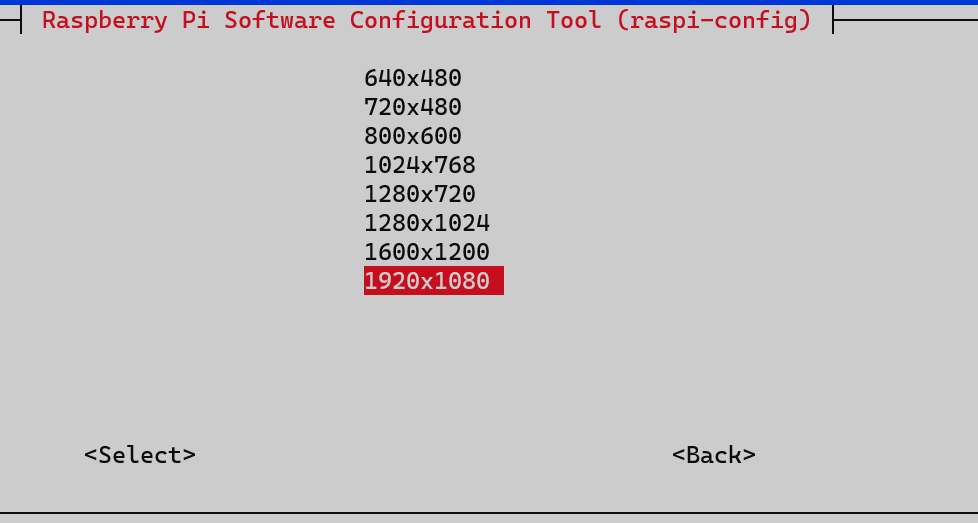

Then select a resolution that best suits your display

You will be asked to reboot the Raspberry Pi.

Download the VNC Viewer to your computer. There are versions for Windows, Mac, Linux, iOS, Android, and other operating systems, including Raspberry Pi.

https://www.realvnc.com/connect/download/viewer/

When you have installed the VNC Viewer, you can launch it and connect to your Raspberry Pi using the IP address of the Raspberry Pi.

If this is a fresh install of the operating system, the Raspberry Pi desktop will show some notifications to guide you through setting your location, language, time zone, password, Wi-Fi, and desktop, and updating the software.

Install .NET on Raspberry Pi

Microsoft has some good instructions on deploying .NET to your Raspberry Pi. The following steps are taken from the Microsoft Docs.

In your terminal use curl to install the latest version of .NET (at the time of writing .NET 6).

curl -sSL https://dot.net/v1/dotnet-install.sh | bash /dev/stdin --channel Current

To make it easier for the Raspberry Pi to find the .NET libraries enter the following lines into your SSH terminal

echo 'export DOTNET_ROOT=$HOME/.dotnet' >> ~/.bashrc

echo 'export PATH=$PATH:$HOME/.dotnet' >> ~/.bashrc

source ~/.bashrc

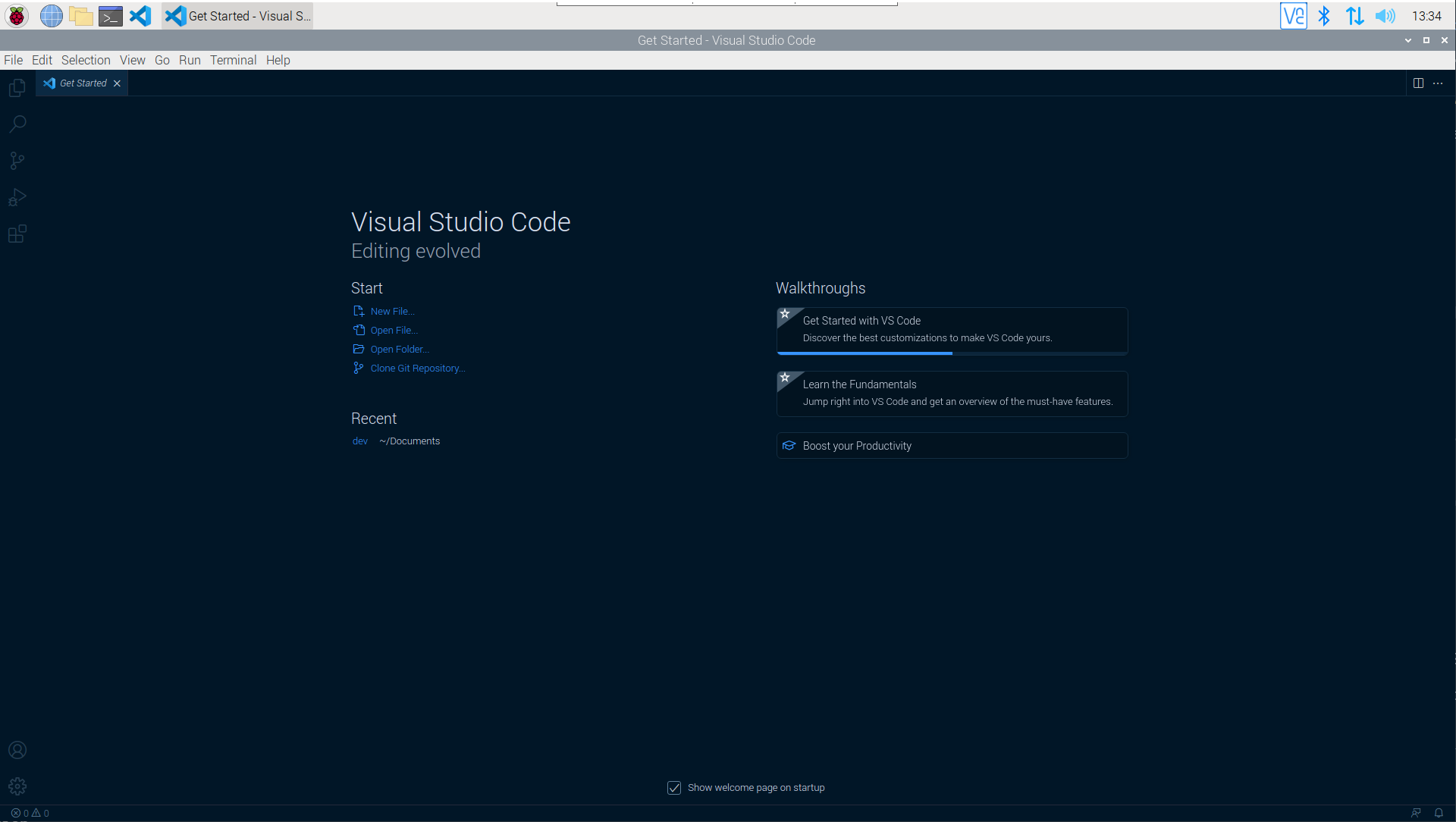

Install Visual Studio Code

Visual Studio Code is my editor of choice for working with code. To install Visual Studio Code on a Raspberry Pi, use the following command in your terminal.

sudo apt install code

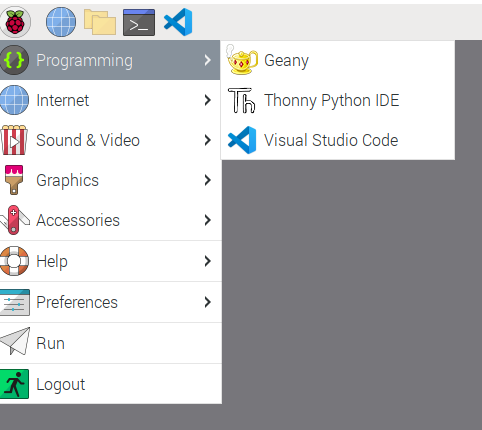

Go to your VNC Viewer and you should now have Visual Studio Code in your Programming menu

(You can see here I have also added VS Code to the applications in the menu bar)

Visual Studio Code can be launched and you are now ready to start developing some great .NET applications on your Raspberry Pi.

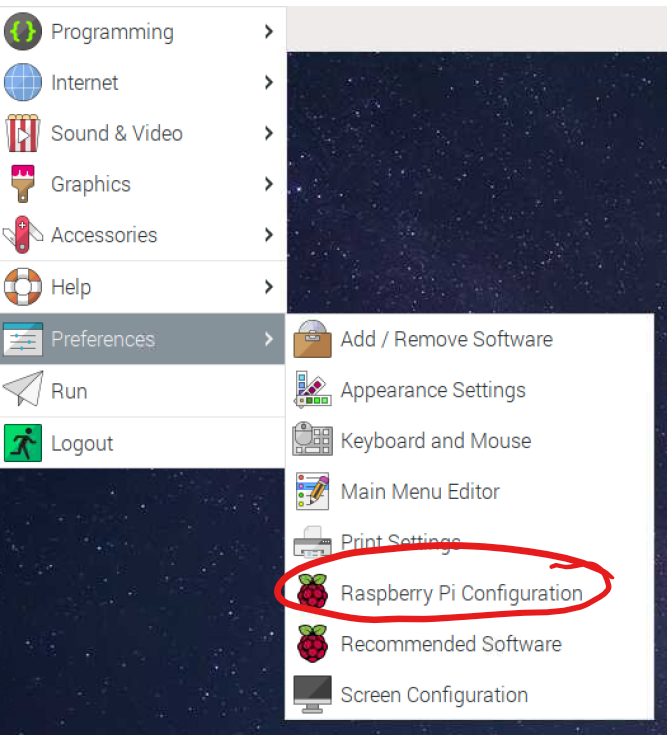

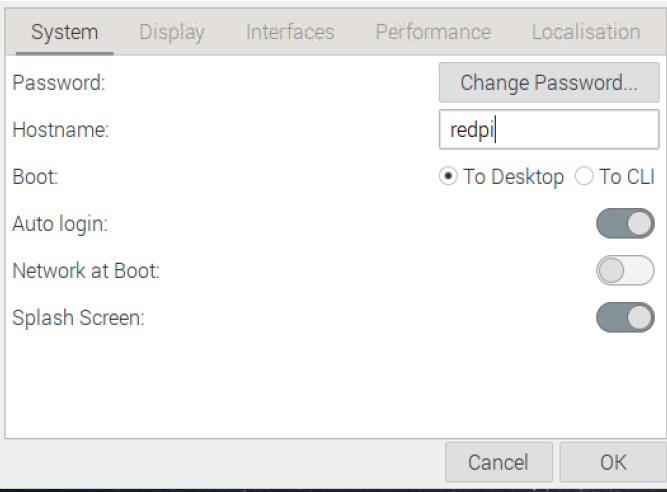

(Optional) Rename the Raspberry Pi

If there is more than one Raspberry Pi on the network then this step is important. If you only have one Raspberry Pi on the local network then you can skip this step.

To rename the Raspberry Pi, in the Raspberry Pi desktop select Preferences - Raspberry Pi Configuration

A dialog will appear, and the hostname can be changed from raspberrypi to anything you like (it is best to avoid spaces and non-alphanumeric characters). Here I have named my Raspberry Pi redpi.

Reboot the Raspberry Pi

At this point, having set up the Raspberry Pi and installed all the tools you need to develop .NET applications, it is best to reboot the Raspberry Pi.

From the SSH terminal enter

sudo reboot

IMPORTANT: if you renamed the Raspberry Pi in the previous step, then this is the name you should use to ping and ssh to the device (instead of raspberrypi).

Conclusion

This Note has explained how to get a Raspberry Pi ready to do .NET development. Included in this Note are the steps needed to use the Raspberry Pi without having a keyboard, mouse, or screen attached to the Raspberry Pi. If you have a computer already set up, then the work on the Raspberry Pi can all be done remotely from your computer, over the network.

(Optional and not recommended) Create new VNC desktops

Make sure you have the latest VNC software with the following command in your SSH terminal.

sudo apt install realvnc-vnc-server

Once the VNC Server is installed and enabled, you can run the VNC server from the SSH terminal with the command below. This also sets the screen resolution for the new virtual desktop; in this case I selected 1920x1080.

vncserver -geometry 1920x1080

The terminal should output the address of the new VNC virtual desktop, as in the example below. This address is needed to connect to the desktop from the VNC client on your computer.

New desktop is raspberrypi:1 (198.161.11.151:1)

.NET Console Animations

Introduction

After getting .NET 6 and Visual Studio Code running on a Raspberry Pi, I played around with some simple .NET 6 console code. The following are my notes on the console animations I created. These will work on any platform supported by .NET 6 (Windows, Mac, Linux), including a Raspberry Pi. If you want to set up a Raspberry Pi to run .NET code, follow the instructions in the .NET Development on a Raspberry Pi document. This document assumes you have installed .NET 6 and Visual Studio Code.

A video that accompanies this Note can be found here

Creating a new .NET project

Start by creating a folder for your code projects. I created a folder called dev. Open a Terminal session, navigate to where you want to create your folder (e.g. Documents), and enter

mkdir dev

This makes the directory dev.

Navigate to that directory

cd dev

Then open Visual Studio Code. Note the 'dot' after code; this tells Visual Studio Code to open the current folder.

code .

Your terminal entries should look something like this:

~ $ cd Documents/

~/Documents $ mkdir dev

~/Documents $ cd dev/

~/Documents/dev $ code .

~/Documents/dev $

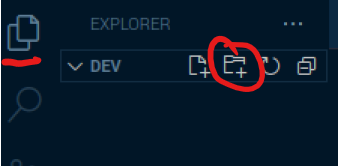

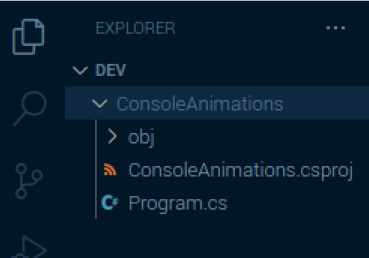

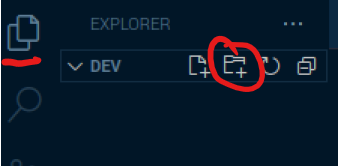

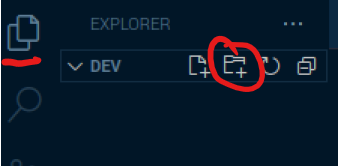

In Visual Studio Code create a new folder in your dev folder, call it ConsoleAnimations

Make sure you have the Explorer open (Ctrl+Shift+E), then click the New Folder icon, and name the new folder ConsoleAnimations

Open the Terminal window in Visual Studio Code. You can use the menu to select Terminal - New Terminal or press Ctrl+Shift+`

The Terminal will open along the bottom of your Visual Studio Code window and it will open in the folder you have opened with Visual Studio Code. In this case it will be your dev folder.

Change the directory to the new folder you just created.

cd ConsoleAnimations/

To create the .NET 6 console application use the command

dotnet new console

The default name of the new project is the name of the folder you are creating the project in. The output should look like this.

~/Documents/dev/ConsoleAnimations $ dotnet new console

The template "Console App" was created successfully.

Processing post-creation actions...

Running 'dotnet restore' on /home/pi/Documents/dev/ConsoleAnimations/ConsoleAnimations.csproj...

Determining projects to restore...

Restored /home/pi/Documents/dev/ConsoleAnimations/ConsoleAnimations.csproj (in 1.13 sec).

Restore succeeded.

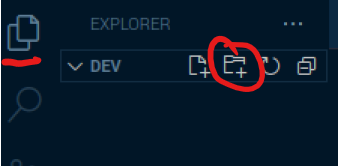

You should also notice that files have been created in the Explorer view of Visual Studio Code

You can run the new application from the Terminal window in Visual Studio Code with

dotnet run

This dotnet run command will compile the project code in the current folder and run it.

~/Documents/dev/ConsoleAnimations $ dotnet run

Hello, World!

As you can see it does not do much yet, other than output Hello, World!

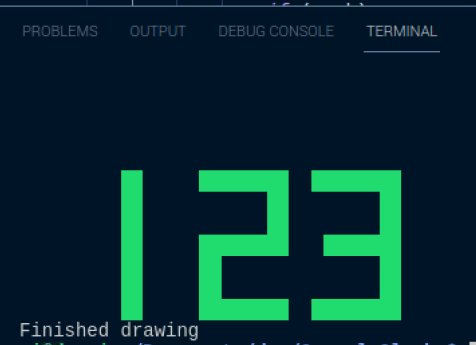

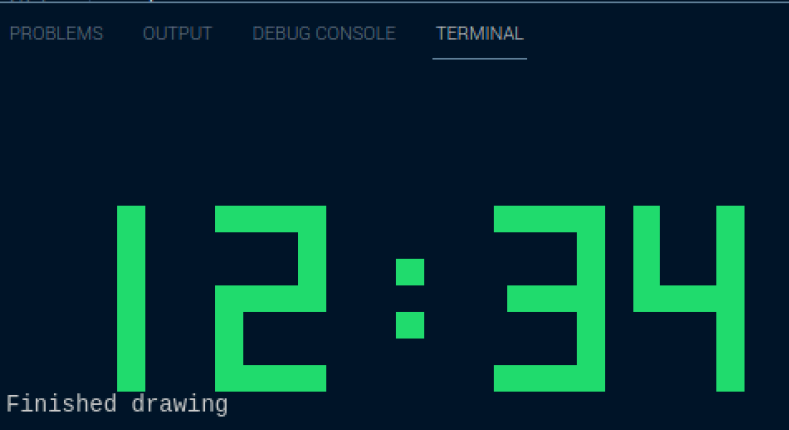

Creating a first animation

The first animation is a super simple spinning line, like you sometimes see when a console application is waiting for something to finish.

In Visual Studio Code, open the Program.cs file that was created with the project previously. You should see the file in the explorer (as seen in the image above). Click on the file to open it.

It contains a single line of code, with a comment on the line above it.

Console.WriteLine("Hello, World!");

Delete both lines, to leave you with an empty file.

Enter the following code into the file

    string frames = @"/-\|";
    Console.CursorVisible = false;
    while (Console.KeyAvailable is false)
    {
        foreach (var c in frames)
        {
            Console.Write($"\b{c}");
            await Task.Delay(300);
        }
    }
    Console.WriteLine("Finished");
    Console.CursorVisible = true;

In the Terminal window enter the dotnet run command again to compile and run the application.

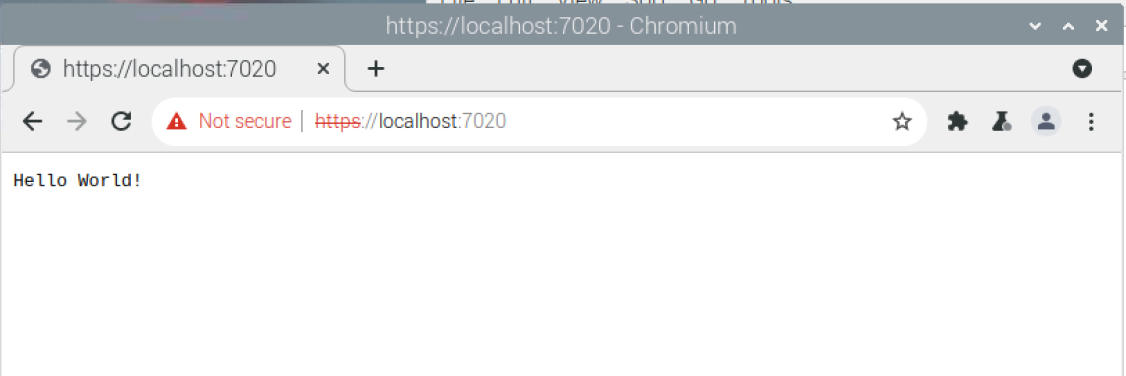

When the program runs, it displays a spinning line until you press any key in the terminal, which ends the program.

Let's break down what this code is doing. The first line is defining a string, a collection of characters, named frames, as it represents the frames of the animation. The code will enumerate through each character, and display it over the previous character to create the animation.

Then the cursor for the console is hidden using Console.CursorVisible = false;. At the end of the program the cursor is made visible again.

The next line creates a loop that will run until a key is pressed in the console. Console.KeyAvailable will return true when a key has been pressed, and so it is checked that Console.KeyAvailable is false. While a key is not available, do everything inside the braces, again and again.

The foreach loop takes each character c in the string frames, and writes it out, preceded by a backspace (the \b character is a backspace). After the output of each character the program waits for 300 milliseconds before continuing. The await Task.Delay call tells the program to pause (or delay) before taking the next step.

When a key press is available, the while loop will finish and the program outputs that it has finished.
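As a small illustration of the first line and the foreach loop described above, the following sketch prints what the frames string contains instead of animating it. It assumes the same .NET 6 console project; the names escaped and written are only for illustration and are not part of the original program. The @ prefix makes the string verbatim, so the backslash is an ordinary character rather than the start of an escape sequence, which is why the string holds exactly four frames.

```csharp
using System;

// The @ prefix makes this a verbatim string: the backslash is a plain character.
string frames = @"/-\|";

// The same four characters written as a regular string need an escaped backslash.
string escaped = "/-\\|";

Console.WriteLine(frames.Length);      // prints 4
Console.WriteLine(frames == escaped);  // prints True

foreach (var c in frames)
{
    // What the animation writes at each step: a backspace followed by one frame.
    string written = $"\b{c}";
    Console.WriteLine($"frame '{c}' is written as {written.Length} characters");
}
```

Each pass of the loop writes two characters: the backspace moves the cursor back over the previous frame, and the new frame is drawn in its place.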

Creating an Animate method

To make code easier to manage it is broken down into components of functionality. In this step a method will be created to encapsulate the animation code. As this program grows you will see why this is useful.

Edit your Program.cs file to create an Animate method as shown.

    string frames = @"/-\|";
    Console.CursorVisible = false;
    await Animate(frames);
    Console.WriteLine("Finished");
    Console.CursorVisible = true;

    async Task Animate(string frames)
    {
        while (Console.KeyAvailable is false)
        {
            foreach (var c in frames)
            {
                Console.Write($"\b{c}");
                await Task.Delay(300);
            }
        }
    }

In the Terminal window enter the dotnet run command again to compile and run the application.

When the program runs, it will display the same spinning line until you press a key.

In the code changes the while loop has been moved into a method called Animate. This Animate method takes a parameter of type string. The string is used to define the frames to animate.

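The async keyword at the start of the method allows the method to use await, and the Task return type gives the caller something to wait on. This does not necessarily mean the method runs on a different thread. Instead, whenever the method awaits something that has not yet finished (such as Task.Delay), control returns to the caller. This means the Animate method could be called and then other code could continue running while the animation is waiting. The await is used when calling the Animate method to tell the runtime to wait until the method has completed before continuing to run the code.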
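The difference between calling an async method and awaiting it can be seen in a minimal sketch like the one below. It is not part of the animation program: Work, log, and pending are hypothetical names, and Work is just a stand-in for Animate that waits once.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

var log = new List<string>();

// Start the method without awaiting it yet. It runs until its first await,
// then hands control back to the caller.
Task pending = Work();
log.Add("caller continues");

// Now wait for the method to complete before moving on.
await pending;
log.Add("caller resumed");

foreach (var entry in log)
{
    Console.WriteLine(entry);
}

// Hypothetical stand-in for Animate: it waits once and records when it starts and ends.
async Task Work()
{
    log.Add("work started");
    await Task.Delay(300);
    log.Add("work finished");
}
```

The entries should appear in the order work started, caller continues, work finished, caller resumed. In the animation program the call is awaited immediately, so nothing else runs until Animate returns.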

This process of taking some existing code and restructuring the code without changing the behaviour is called refactoring.

Animating multiple lines

In this step the animation will go beyond a single character to multiple lines. Each line will still only have a single character at this point. This will be extended further in following steps.

Edit the Program.cs file to support two lines for animations, as follows:

    string[] frames = new string[]{@"/-\|", @"._._"};
    Console.CursorVisible = false;
    await Animate(frames);
    Console.WriteLine("Finished");
    Console.CursorVisible = true;

    async Task Animate(string[] frames)
    {
        Console.Clear();
        int length = frames[0].Length;
        while (Console.KeyAvailable is false)
        {
            for (int i = 0; i < length; i++)
            {
                foreach (var f in frames)
                {
                    Console.WriteLine(f[i]);
                }
                await Task.Delay(300);
                Console.CursorTop = 0;
            }
        }
    }

In the Terminal window, enter the dotnet run command again to compile and run the application.

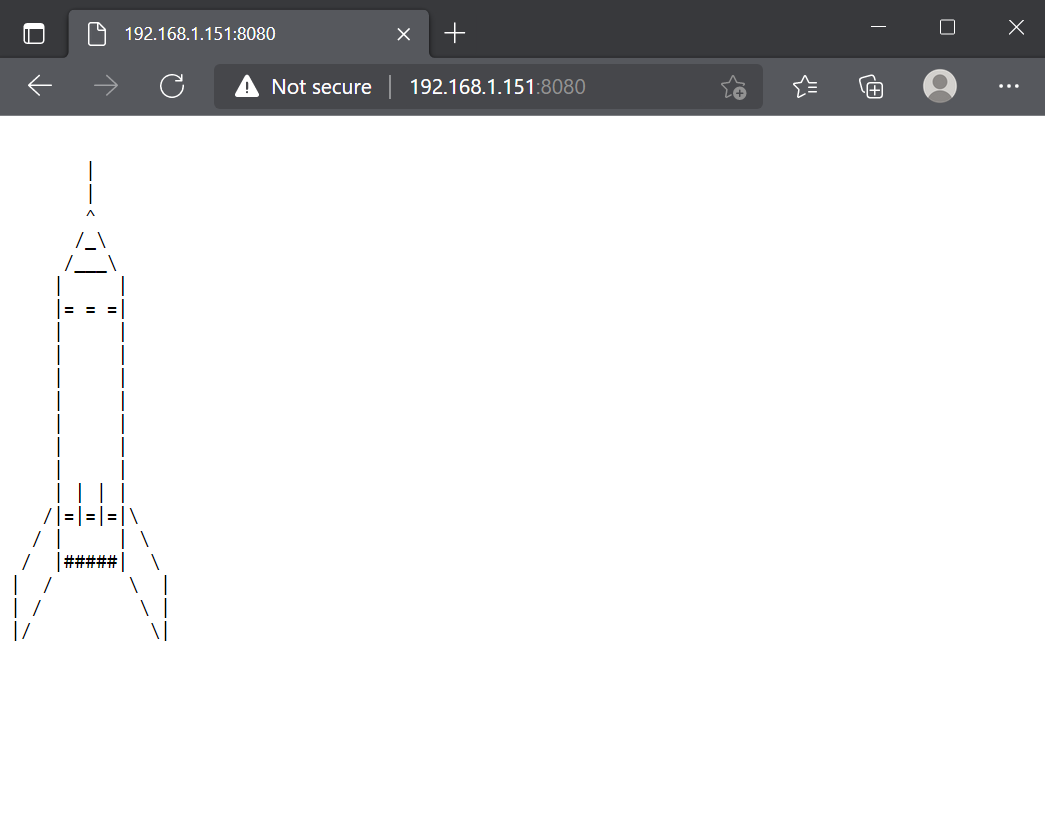

When the program runs, it clears the terminal window and then displays two lines. The top line has the same spinning line as before. The line below shows a dot/line transition, which you might see as a shrinking line or a growing dot.

There are quite a few code changes in this step.

The frames string is now an array of strings. The [] notation after the variable type tells the compiler this is not a single string, it is a collection of strings. In maths you might call this a single dimensional array; in programming it is simply called an array.

The array is initialized with two strings. The first is the string used so far in this code, and the second is a string of the same length that defines the frames to animate on the second line.
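To make the array description above concrete, here is a minimal sketch that only reads from the array, assuming the same two strings as the program. Nothing is animated; it simply shows how the [] notation is used to reach a string in the array and a character in a string.

```csharp
using System;

string[] frames = new string[] { @"/-\|", @"._._" };

Console.WriteLine(frames.Length);  // prints 2, the number of strings in the array
Console.WriteLine(frames[0]);      // prints /-\| (the first string, index 0)
Console.WriteLine(frames[1]);      // prints ._._ (the second string, index 1)
Console.WriteLine(frames[1][0]);   // prints . (the first character of the second string)
```

Indexes start at 0, so frames[0] is the first string and frames[1] is the second.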

The Animate method has also changed its parameter to take an array of strings rather than a single string.

The first line of the method now clears the console (or terminal) window of all contents. This provides the canvas for the animation to be displayed in the console.

A new integer (number) variable is set to the length of the first string in the collection of strings passed into the method with int length = frames[0].Length;

This code assumes all the strings in the collection are the same length, which is true here. If we extended this further we might like to change this to use a parameter to set the length of the string, as making assumptions in code is never a good idea.
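One alternative to passing the length as a parameter is to check the assumption before animating. The sketch below is not from the original program: FrameLength is a hypothetical helper that returns the shared length of the strings, and throws an exception if the array is empty or the lengths differ.

```csharp
using System;

string[] frames = new string[] { @"/-\|", @"._._" };

int length = FrameLength(frames);
Console.WriteLine(length); // prints 4

// Hypothetical helper: returns the shared length of all the strings,
// or throws if the array is empty or the lengths differ.
int FrameLength(string[] lines)
{
    if (lines.Length == 0)
    {
        throw new ArgumentException("At least one line of frames is required.", nameof(lines));
    }

    int expected = lines[0].Length;
    foreach (var line in lines)
    {
        if (line.Length != expected)
        {
            throw new ArgumentException("All lines must have the same number of frames.", nameof(lines));
        }
    }

    return expected;
}
```

With a check like this, a mistake such as a second string with only three characters is reported straight away, rather than failing part way through the animation when the missing character is read.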

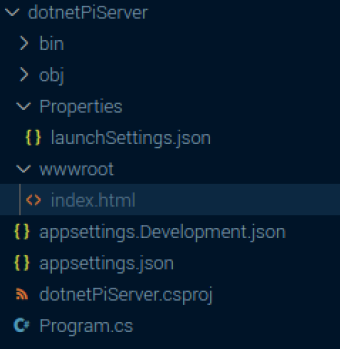

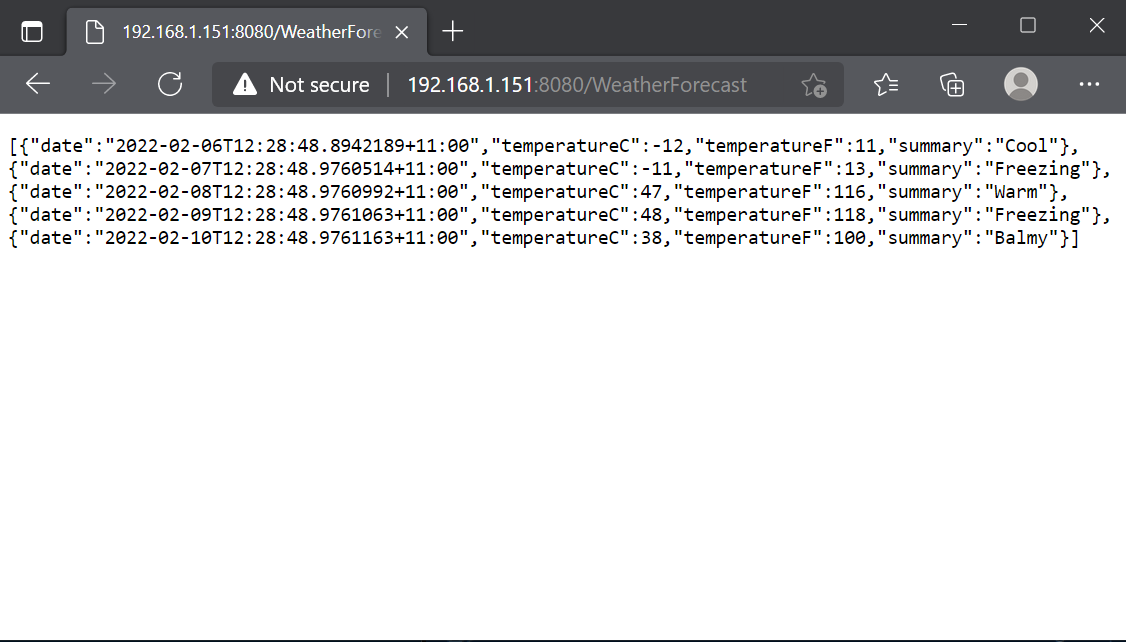

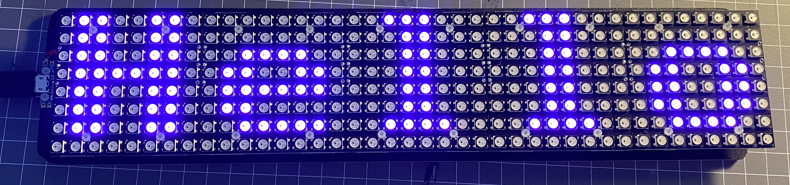

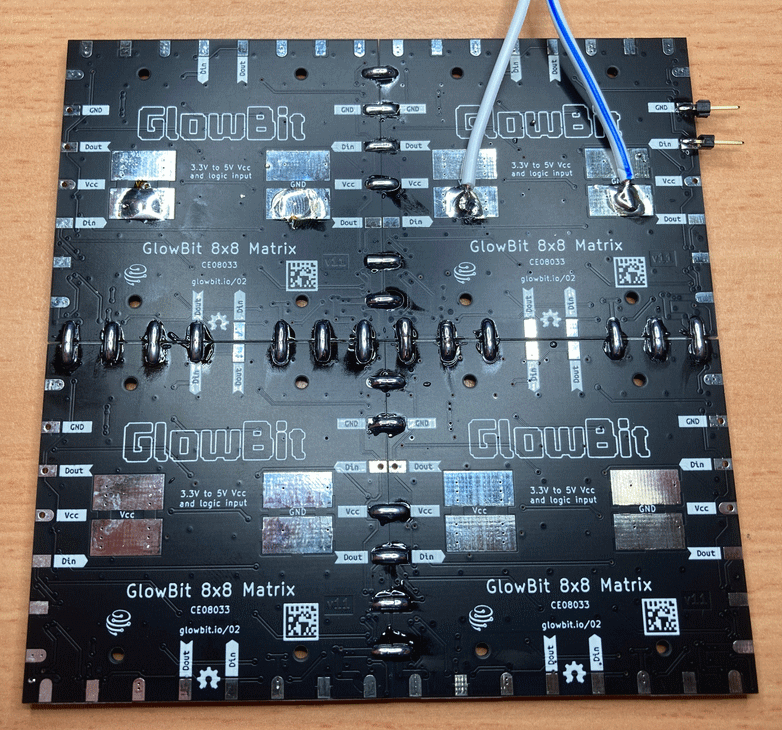

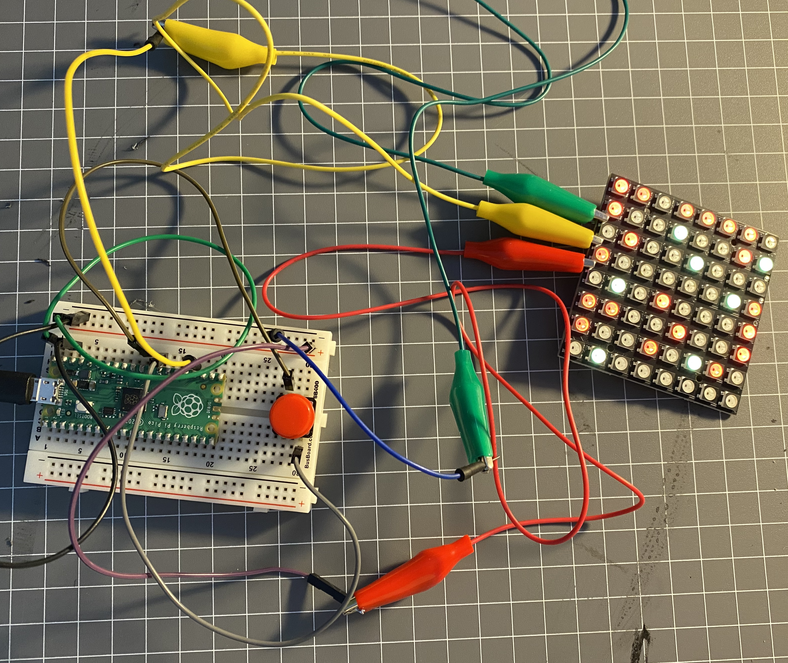

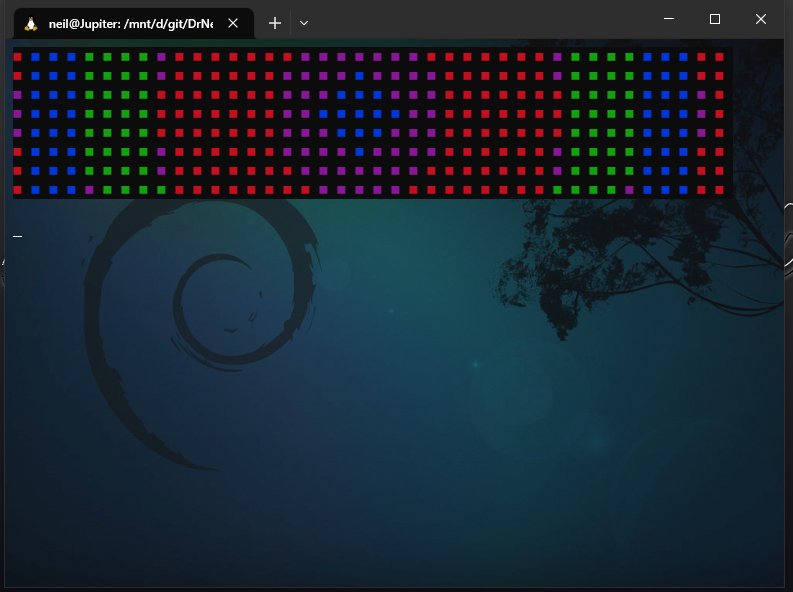

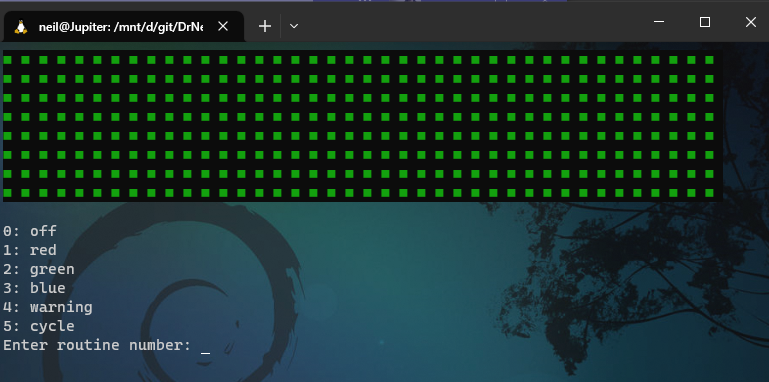

Inside the while loop we now have a new for loop, for(int i = 0; i < length; i++). This counts the variable i from 0 to 3. The length of the string is 4 characters, but the loop stops as soon as i is no longer less than the length (the condition i < length), and 3 is the last number that is less than 4.
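The counting can be checked with a short sketch that runs the same style of for loop over a single four-character string and prints each value of i. It is a stand-alone illustration, assuming the same spinner string; the variable name frame is only for this example.

```csharp
using System;

string frame = @"/-\|";
int length = frame.Length; // 4 characters

// i takes the values 0, 1, 2, 3; the loop ends when i reaches 4.
for (int i = 0; i < length; i++)
{
    Console.WriteLine($"i = {i}, character = {frame[i]}");
}
```

This prints four lines, from i = 0 with the character / through to i = 3 with the character |.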